To write good NRQL queries, it helps to understand how our various NRQL clauses and functions work. This doc contains definitions of NRQL clauses and functions, and gives examples of how to use them.

Looking for basic NRQL syntax rules? See How to use NRQL. For a tutorial, see Introductory NRQL tutorial.

Query components

As noted in our basic NRQL syntax doc, every NRQL query will contain a SELECT clause and a FROM clause, with all other clauses being optional. The clause definitions below also contain example NRQL queries.

DELETE keyword

The DELETE keyword in New Relic Query Language (NRQL) is used to remove specific data and attributes within Pipeline Control. This helps manage the data that is ingested into the New Relic Database (NRDB).

The DELETE keyword is applied in NRQL queries to specify which data or attributes should be removed. Here are some examples:

Here're some examples:

Deleting specific metrics:

DELETE FROM MetricWHERE metricName = 'newrelic.goldenmetrics.infra.kubernetes_pod.podScheduled'- Removes metrics with the specified name.

Deleting specific spans:

DELETE FROM Span WHERE appName = 'external-usage-consumer (test-odd-wire)'- Removes spans associated with the specified application.

Deleting specific agent updates:

DELETE FROM AgentUpdate WHERE a = 'b'- Removes agent updates where the attribute

aequalsb.

- Removes agent updates where the attribute

Required clauses

SELECT attribute ...SELECT function(attribute) ...The SELECT specifies what portion of a data type you want to query by specifying an attribute or a function. It's followed by one or more arguments separated by commas. In each argument you can:

- Get the values of all available attributes by using

*as a wildcard. For example:SELECT * FROM Transaction. - Get values associated with a specified attribute or multiple attributes specified in a comma separated list.

- Get aggregated values from specified attributes by selecting an aggregator function.

- Label the results returned in each argument with the

ASclause.

You can also use SELECT with basic math functions.

This query returns the average response time since last week.

SELECT average(duration) FROM PageView SINCE 1 week agoYou can include both the * wildcard and individual attributes, functions, math expressions, and NRQL variables in the same SELECT statement. * may appear at the beginning or end of the SELECT list, and the additional columns selected will appear at the beginning or end of the results table, respectively:

SELECT *, attribute, function(attribute), attribute1 + attribute2 FROM ...WITH attribute1 + attribute2 AS attrSum SELECT attrSum, attribute, function(attribute), * FROM ...This query returns all available PageView attributes with additional columns at the beginning.

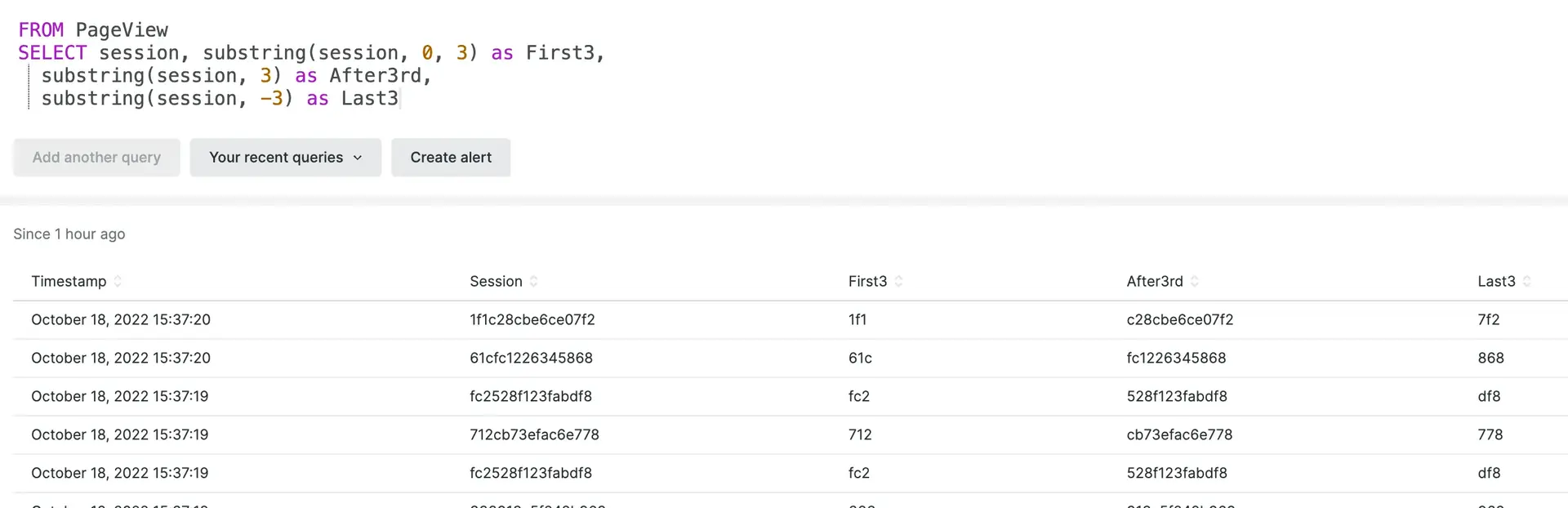

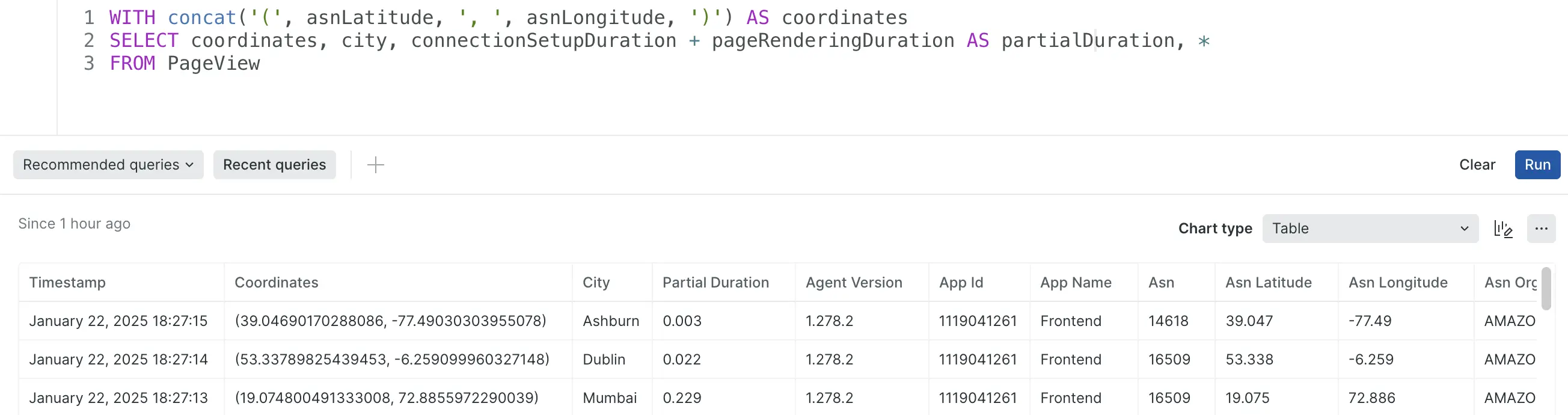

WITH concat('(', asnLatitude, ', ', asnLongitude, ')') AS coordinatesSELECT coordinates, city, connectionSetupDuration + pageRenderingDuration AS partialDuration, *FROM PageView

SELECT * with additional columns example

ヒント

Only non-aggregator functions are supported in the SELECT list along with *.

SELECT ...FROM data type...Use the FROM clause to specify the data type you wish to query. You can start your query with FROM or with SELECT. You can merge values for the same attributes across multiple data types in a comma separated list.

This query returns the count of all APM transactions over the last seven days:

SELECT count(*) FROM Transaction SINCE 7 days agoThis query returns the count of all APM transactions and browser events over the last three days:

SELECT count(*) FROM Transaction, PageView SINCE 3 days agoSee lookup().

Optional clauses

SELECT ...AS 'label'...Use the AS clause to label an attribute, aggregator, step in a funnel, or the result of a math function with a string delimited by single quotes. The label is used in the resulting chart. Note that AS clause labels in time series charts will not be displayed if a FACET clause is used.

This query returns the number of page views per session:

SELECT count(*)/uniqueCount(session) AS 'Pageviews per Session'FROM PageViewThis query returns a count of people who have visited both the main page and the careers page of a site over the past week:

SELECT funnel(SESSION, WHERE name = 'Controller/about/main' AS 'Step 1', WHERE name = 'Controller/about/careers' AS 'Step 2')FROM PageView SINCE 1 week agoSELECT ... (SINCE or UNTIL) (integer units) AGOCOMPARE WITH (integer units) AGO...Use the COMPARE WITH clause to compare the values for two different time ranges.

COMPARE WITH requires a SINCE or UNTIL statement. The time specified by COMPARE WITH is relative to the time specified by SINCE or UNTIL. For example, SINCE 1 day ago COMPARE WITH 1 day ago compares yesterday with the day before.

The time range for theCOMPARE WITH value is always the same as that specified by SINCE or UNTIL. For example, SINCE 2 hours ago COMPARE WITH 4 hours ago might compare 3:00pm through 5:00pm against 11:00am through 1:00pm.

COMPARE WITH can be formatted as either a line chart or a billboard:

- With

TIMESERIES,COMPARE WITHcreates a line chart with the comparison mapped over time. - Without

TIMESERIES,COMPARE WITHgenerates a billboard with the current value and the percent change from theCOMPARE WITHvalue.

Example: This query returns data as a line chart showing the 95th percentile for the past week compared to the same range one week ago. First as a single value, then as a line chart.

SELECT percentile(duration, 95) FROM PageViewSINCE 1 week ago COMPARE WITH 1 week AGO

SELECT percentile(duration, 95) FROM PageViewSINCE 1 week ago COMPARE WITH 1 week AGO TIMESERIES AUTO重要

For FACET queries using COMPARE WITH, the facets in the result are selected based on the time range specified using SINCE and UNTIL and not the prior time range being compared. The results of a FACET query for the prior time range alone may include a different set of facets.

You can use this clause with these data types:

TransactionTransactionErrorCustom events reported via agent APIs

The purpose of

EXTRAPOLATEis to mathematically compensate for the effects of APM agent sampling of event data so that query results more closely represent the total activity in your system.This clause will be useful when a APM agent reports so many events that it often passes its harvest cycle reporting limits. When that occurs, the agent begins to sample events.

When

EXTRAPOLATEis used in a NRQL query that supports its use, the ratio between the reported events and the total events is used to extrapolate a close approximation of the total unsampled data. When it is used in a NRQL query that doesn’t support its use or that hasn’t used sampled data, it has no effect.重要

Note that

EXTRAPOLATEis most useful for homogenous data (like throughput or error rate). It's not effective when attempting to extrapolate a count of distinct things (likeuniqueCount()oruniques()).This clause works only with NRQL queries that use one of the following aggregator functions:

apdexaveragecounthistogramsumpercentage(if function it takes as an argument supportsEXTRAPOLATE)rate(if function it takes as an argument supportsEXTRAPOLATE)stddevA query that will show the extrapolated throughput of a service named

interestingApplication.SELECT count(*) FROM Transaction WHERE appName = 'interestingApplication'SINCE 60 minutes ago EXTRAPOLATEA query that will show the extrapolated throughput of a service named

interestingApplicationby transaction name, displayed as a time series.SELECT count(*) FROM Transaction WHERE appName = 'interestingApplication'SINCE 60 minutes ago FACET name TIMESERIES 1 minute EXTRAPOLATE

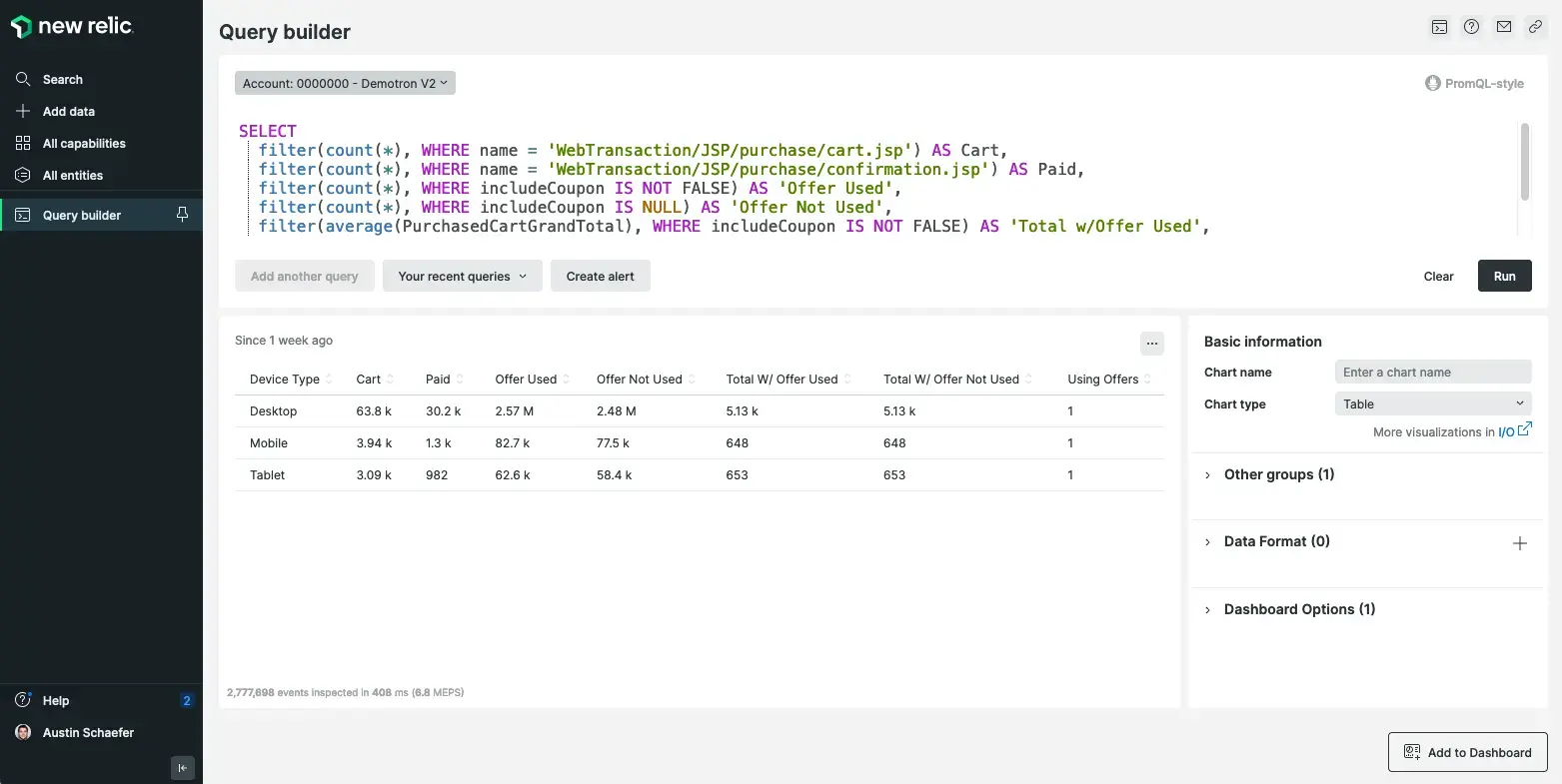

SELECT ...FACET attribute...Use FACET to separate and group your results by attribute values. For example, you could FACET your PageView data by deviceType to figure out what percentage of your traffic comes from mobile, tablet, and desktop devices.

Use the LIMIT clause to specify how many facets appear (default is 10). For more complex grouping, use FACET CASES. FACET clauses support up to five attributes, separated by commas.

The facets are sorted in descending order by the first field you provide in the SELECT clause. If you are faceting on attributes with more than 5,000 unique values, a subset of facet values is selected and sorted according to the query type. Note that if a time series chart returns no data (NRQL matches no matching data, invalid NRQL, etc.) then it will only show a flat line with the label matching the first table in the FROM clause.

When selecting min(), max(), percentile(), average() or count(), FACET uses those functions to determine how facets are picked and sorted. When selecting any other function, FACET uses the frequency of the attribute you are faceting on to determine how facets are picked and sorted.

This query shows cities with the highest pageview counts. This query uses the total number of pageviews per city to determine how facets are picked and ordered.

SELECT count(*) FROM PageView FACET cityThis query shows the cities that access the highest number of unique URLs. This query uses the total number of times a particular city appears in the results to determine how facets are picked and ordered.

SELECT uniqueCount(pageUrl) FROM PageView FACET cityAdvanced segmentation and cohort analysis allow you to facet on bucket functions to more effectively break out your data.

Cohort analysis is a way to group results together based on timestamps. You can separate them into buckets that cover a specified range of dates and times.

重要

When using functions to aggregate attribute values, it's important the attribute being aggregated in the first function of your query contains non-null values. Facets will only be chosen for rows which contain a non-null value for the attribute in the first function.

Example:

FROM Event SELECT average(attribute) FACET nameNames will only be chosen from rows where attribute is not null.

To check if the attribute you're using in your function contains non-null values, run the following query:

FROM Event SELECT attribute, name WHERE attribute IS NOT NULLUse FACET ... AS to name facets using the AS keyword in queries. This clause is helpful for adding clearer or simplified names for facets in your results. It can also be used to rename facets in nested aggregation queries.

FACET ... AS queries will change the facet names in results (when they appear as headers in tables, for example), but not the actual facet names themselves.

FROM Transaction SELECT count(*) FACET response.headers.contentType AS 'content type'SELECT ...FACET CASES ( WHERE attribute operator value, WHERE attribute operator value, ...)...Use FACET CASES to break out your data by more complex conditions than possible with FACET. Separate multiple conditions with a comma ,. For example, you could query your PageView data and FACET CASES into categories like less than 1 second, from 1 to 10 seconds, and greater than 10 seconds. You can combine multiple attributes within your cases, and label the cases with the AS selector. Data points will be added to at most one facet case, the first facet case that they match.

You may also use a time function with your attribute, and you can use the OR operator to facet results that don't match any of your specified cases.

SELECT count(*) FROM PageView FACET CASES ( WHERE duration < 1, WHERE duration > 1 AND duration < 10, WHERE duration > 10)This example groups results into one bucket where the transaction name contains login, and another where the URL contains login and a custom attribute indicates that the user was a paid user:

SELECT count(*) FROM Transaction FACET CASES ( WHERE name LIKE '%login%', WHERE name LIKE '%feature%' AND customer_type = 'Paid')This example uses the AS selector to give your results a human-readable name:

SELECT count(*) FROM Transaction FACET CASES ( WHERE name LIKE '%login%' AS 'Total Logins', WHERE name LIKE '%feature%' AND customer_type = 'Paid' AS 'Feature Visits from Paid Users')This example uses the OR operator to facet results that didn't match any of your cases:

SELECT count(*) FROM Transaction FACET CASES ( WHERE name LIKE '%login%', WHERE name LIKE '%feature%' AND customer_type = 'Paid') OR nameIn NRQL, the default is for the first aggregation in the SELECT clause to guide the selection of facets in a query. FACET ... ORDER BY allows you to override this default behavior by adding an aggregate function with the ORDER BY modifier to specify how facets are selected. Specifically, the clause will override the priority by which facets are chosen to be in the final result before being limited by the LIMIT clause. This clause can be used in querying but not for alerts or streaming.

This example shows how to use FACET ... ORDER BY to find the average durations of app transactions, showing the top 10 (default limit) highest durations by apps which have the highest response size. In this case, if FACET ... ORDER BY is not used, the query results will instead show the top 10 by highest durations, with response size being irrelevant to the app selection.

FROM Transaction SELECT average(duration) TIMESERIES FACET appName ORDER BY max(responseSize)Keep in mind that if you use the FACET ... ORDER BY clause, you can't change the sort order by adding the ASC and DESC modifiers. By default, this clause uses DESC.

ヒント

Because the operations are performed before the LIMIT clause is applied, FACET ... ORDER BY does not impact the sort of the final query results, which will be particularly noticeable in the results for non-time series queries.

重要

The ORDER BY modifier in this case works differently than the ORDER BY clause. When parsing queries that follow the format FACET attribute1 ORDER BY attribute2, New Relic will read these as FACET ... ORDER BY queries, but only if ORDER BY appears immediately after FACET. Otherwise ORDER BY will be interpreted by New Relic as a clause.

Use the JOIN clause to combine data from one event type with the results of a subquery based on a common attribute or key.

FROM Event [INNER|LEFT] JOIN (SELECT... FROM...) ON [key =] key SELECT ...There are a few simple rules for subquery joins:

- The

JOINclause must always follow immediately after theFROMclause. - Prefixing a join type (

INNERorLEFT) is optional. When omitted, the join type defaults toINNER. - Parenthesis containing a subquery must immediately follow

JOIN. - The

ONclause must immediately follow the subquery.

Join types | |

|---|---|

| The result will only include values from the outer query that have a matching value in the results of the joined subquery. This is the default join type. |

| The result will include events from the outer query that do not have a match from the joined subquery. |

Join | |

|---|---|

| Defines the key values to compare in the subquery and the outer query. The only comparison operator allowed is equality.

|

| This is an abbreviated syntax for when the key identifier is the same in both contexts. It is equivalent to |

Restrictions and limitations to consider:

- The joined subquery will continue to have a default

LIMITof 10, with a maximumLIMITof 5,000. Note that the outer query'sLIMITdoes not affect the inner query. - The use of

TIMESERIESin the joined subquery is not supported. If your outer query usesTIMESERIES, keep in mind that the joined subquery will provide a single result for the full query timespan. - Like all subqueries, joined subqueries cannot be used in alert conditions.

- While

SELECT *is supported in the parent query, it is not supported in the joined subquery. - The cardinality of the join is limited to 1:100, meaning a single join key cannot map to more than one hundred rows in the subquery result.

For an in depth look at the JOIN clause, please see the

NRQL subquery joins

tutorial.

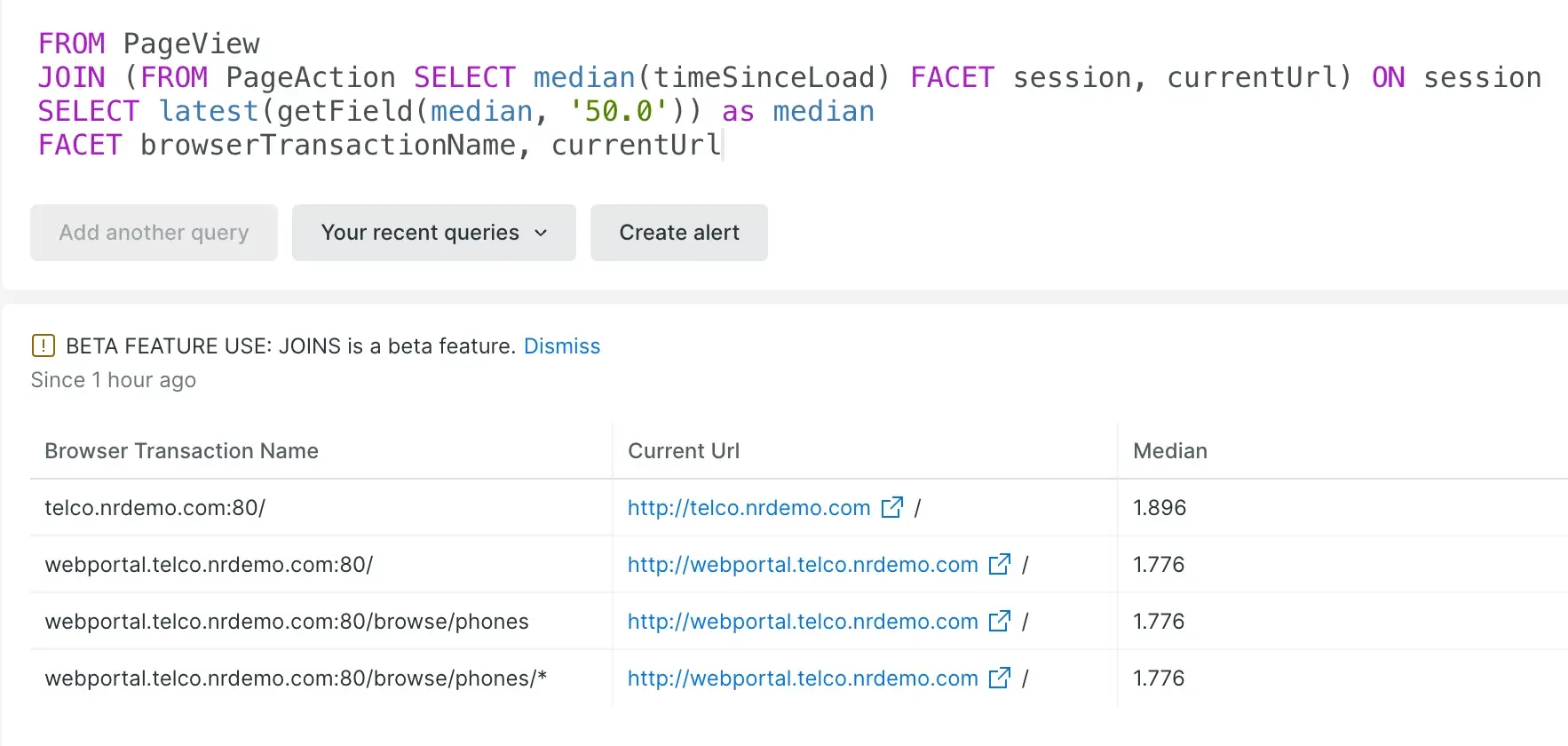

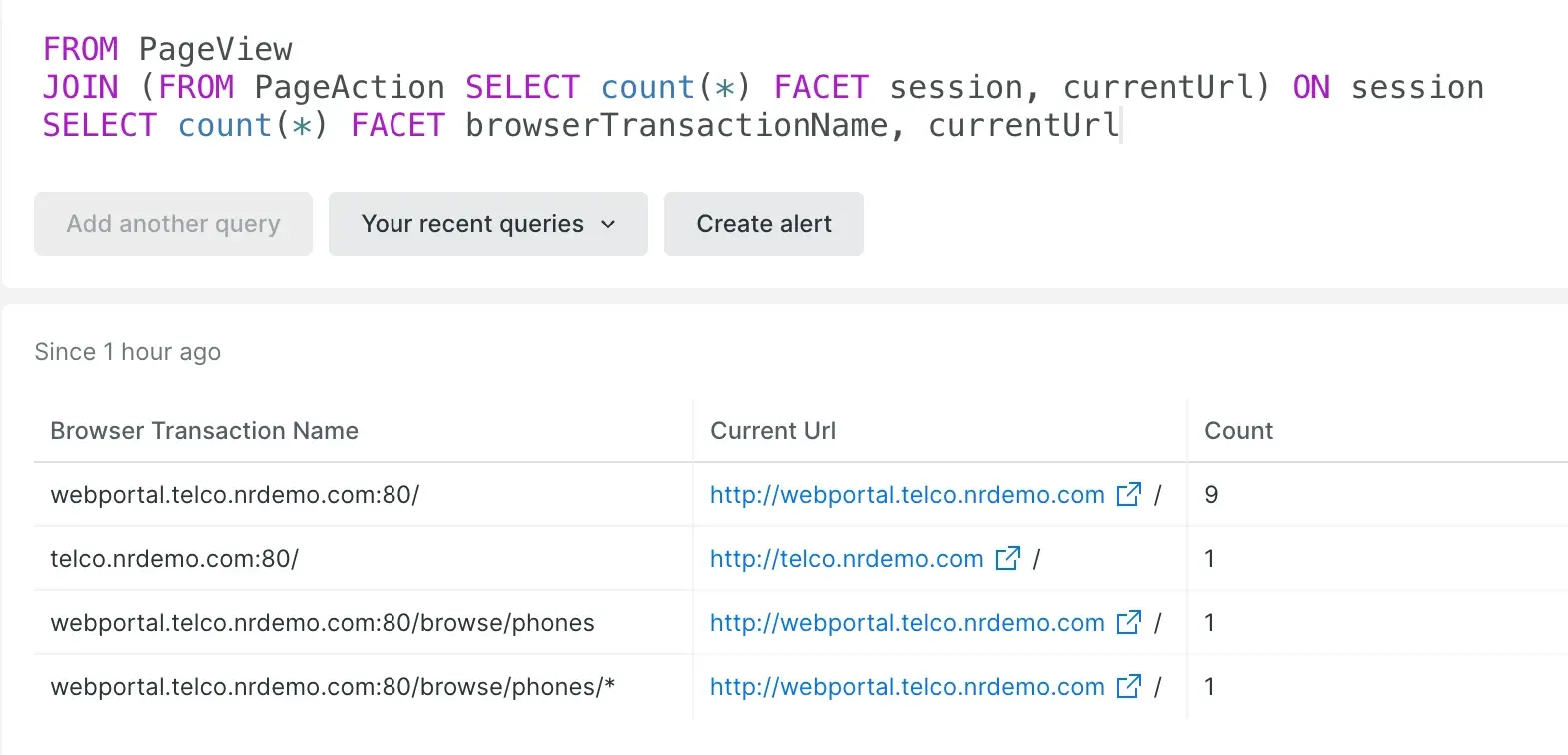

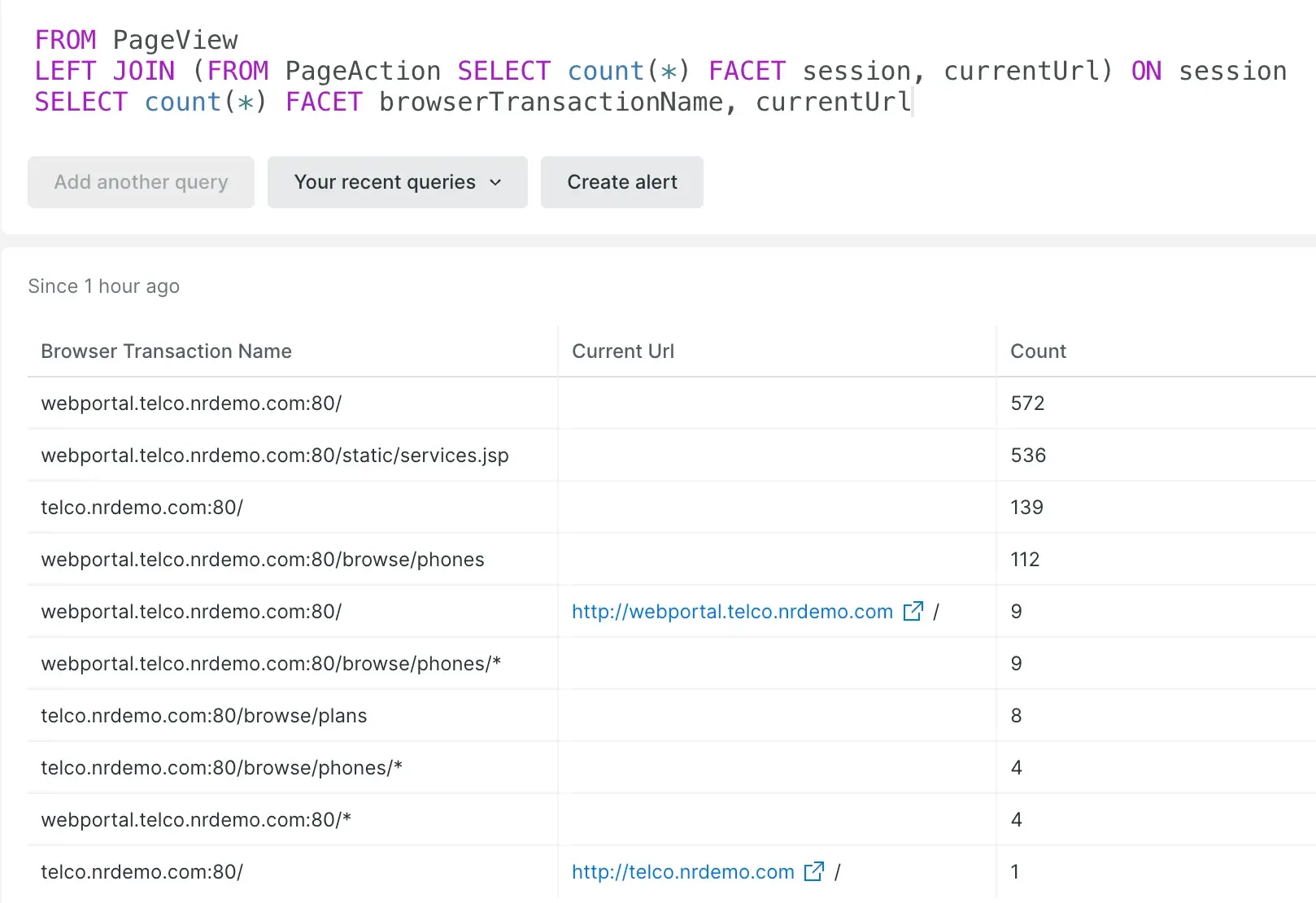

This query finds the count of events faceted by browserTransactionName from the

PageView event type, and then by currentUrl from the PageAction event type. This joins

the two event types based on common session attribute values.

FROM PageViewJOIN ( FROM PageAction SELECT count(*) FACET session, currentUrl) ON sessionSELECT count(*) FACET browserTransactionName, currentUrl

Faceted INNER JOIN example

This example queries the same data as the faceted INNER JOIN

example, but as a LEFT JOIN query, the results include items

in the PageView table that do not have matching session values in the results of the

PageAction subquery.

FROM PageViewLEFT JOIN ( FROM PageAction SELECT count(*) FACET session, currentUrl) ON sessionSELECT count(*) FACET browserTransactionName, currentUrl

Faceted LEFT JOIN example

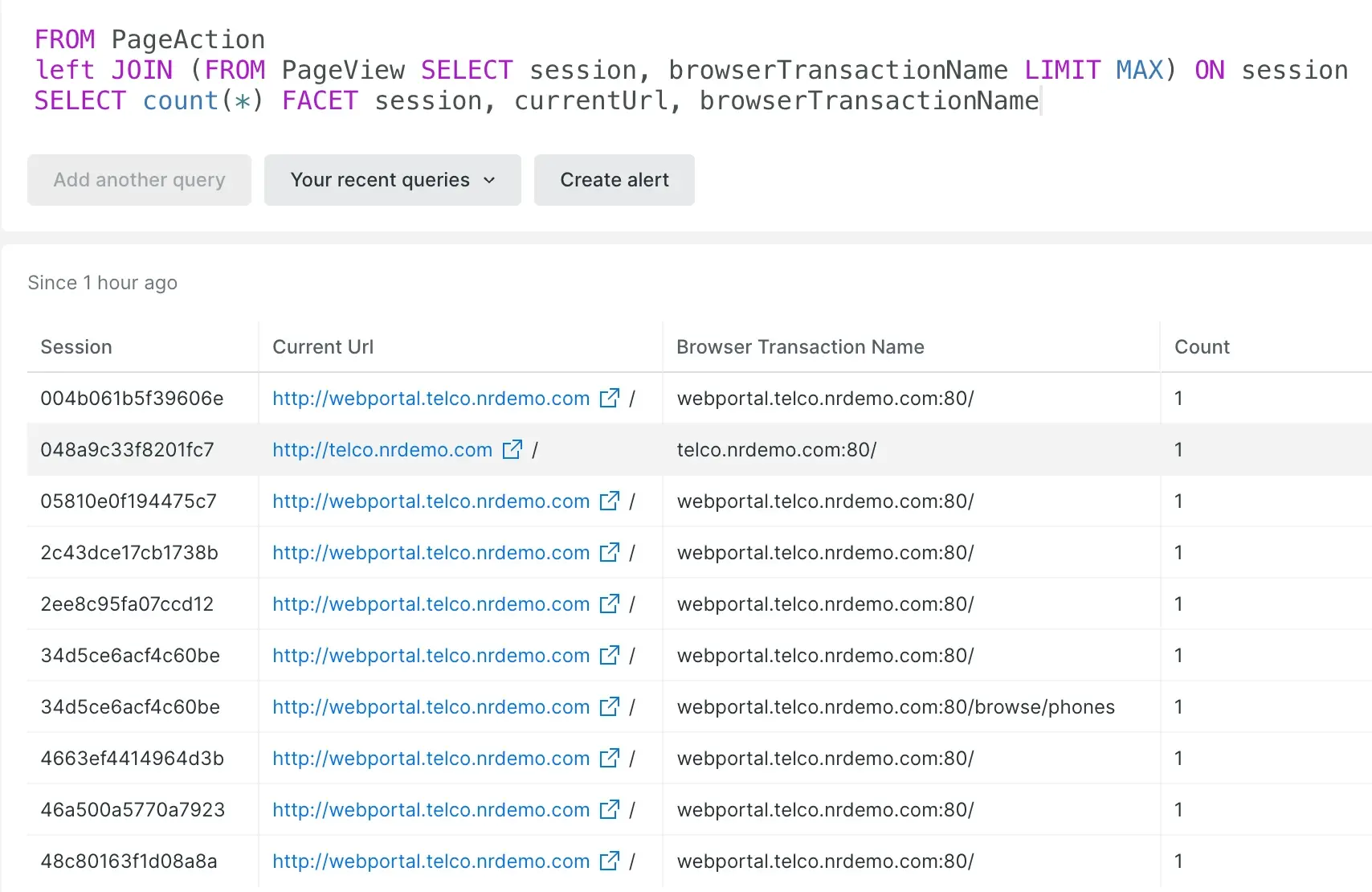

Here we are performing an unaggregated, row-wise subquery, with the outer

query finding the count of events faceted by currentUrl from the PageAction

event type, and then by browserTransactionNamed from the PageView event type.

This joins the two event types based on common session attribute values.

Note that the session value 34d5ce6acf4c60be has two browserTransactionName values from

the subquery's PageView event type, adding additional rows to the result.

FROM PageActionLEFT JOIN ( FROM PageView SELECT session, browserTransactionName LIMIT MAX) ON sessionSELECT count(*) FACET session, currentUrl, browserTransactionName LIMIT MAX

Unaggregated INNER JOIN example

SELECT ...LIMIT count...Use the LIMIT clause to control the maximum number of facet values returned by FACET queries or the maximum number of items returned by SELECT * queries. This clause takes a single integer value as an argument. If the LIMIT clause is not specified, or no value is provided, the limit defaults to 10 for FACET queries and 100 in the case of SELECT * queries.

The maximum allowed value for the LIMIT clause is 5,000. Queries can use the LIMIT MAX clause instead of a specific value, which automatically defaults to the current maximum value. You can use this if you always want to post the maximum number of results, even if it changes in the future. If you want your query's behavior to remain unchanged, specify an explicit value instead of using LIMIT MAX.

This query shows the top 20 countries by session count and provides 95th percentile of response time for each country for Windows users only.

SELECT uniqueCount(session), percentile(duration, 95)FROM PageView WHERE userAgentOS = 'Windows'FACET countryCode LIMIT 20 SINCE YESTERDAYSELECT ...LIMIT count OFFSET count...Use the OFFSET clause with LIMIT to control the portion of rows returned by SELECT * or SELECT column queries. Like the LIMIT clause, OFFSET takes a single integer value as an argument. OFFSET sets the number of rows to be skipped before the selected rows of your query are returned. This is constrained by LIMIT.

OFFSET rows are skipped starting from the most recent record.

For example, the query SELECT interestingValue FROM Minute_Report LIMIT 5 OFFSET 1 returns the last 5 values from Minute_Report except for the most recent one.

The ORDER BY clause allows you to specify how you want to sort your query results in queries that select event attributes by row.

This query orders two specific transaction attributes by duration.

FROM Transaction SELECT appName, duration ORDER BY durationThe default sort order is ascending, but this can be changed by adding the ASC or DESC modifiers.

This query orders all transaction attributes by duration in descending order.

FROM Transaction SELECT * ORDER BY duration DESC重要

The ORDER BY clause does not apply to FACET queries. It should not be confused with the FACET ... ORDER BY clause, which guides facet selection. For more information, see FACET ... ORDER BY.

FROM Transaction SELECT count(*) WHERE error IS TRUE TIMESERIES PREDICT...With the PREDICT clause, you can add forecasts of future data trends into line charts based on historical data. Consider the following points when using this clause:

- Applies only to queries with a

TIMESERIESclause. - Uses the

TIMESERIES <time period>as the interval for predicted data points. - Does not support metric timeslice data while in Public Preview.

重要

The PREDICT clause cannot be used with the COMPARE WITH clause or nested aggregations inside subqueries.

To learn more about how and when you can use PREDICT, refer to NRQL Predictions.

SHOW EVENT TYPES...SHOW EVENT TYPES will return a list of all the data types present in your account for a specific time range. It is used as the first clause in a query instead of SELECT.

重要

In this context, "event types" refers to the data types you can access with a NRQL query.

This query will return all the data types present over the past day:

SHOW EVENT TYPES SINCE 1 day agoSELECT ...SINCE [numerical units AGO | phrase]...The default value is 1 hour ago.

Use the SINCE clause to define the inclusive beginning of a time range for the returned data. You can specify a time zone for the query but not for the results. NRQL results are based on your system time.

When using NRQL, you can set a UTC timestamp, a relative time, or a DateTime string. See Specifying a time.

See also:

The SLIDE BY clause supports a feature known as sliding windows. With sliding windows,SLIDE BY data is gathered into "windows" of time that overlap with each other. These windows can help to smooth out line graphs with a lot of variation in cases where the rolling aggregate (such as a rolling mean) is more important than aggregates from narrow windows of time.

To use SLIDE BY, place it in a query after the TIMESERIES clause. For example, this query pulls data in 5-minute windows with a 1-minute SLIDE BY interval, meaning that each window lasts 5 minutes, but window 1 starts at 0 minutes, window 2 starts at 1 minute, window 3 starts at 2 minutes, and so on.

SELECT average(duration) FROM Transaction TIMESERIES 5 minutes SLIDE BY 1 minuteTo learn more about how and when you can use SLIDE BY, see Create smoother charts with sliding windows. Or, watch this short video (approx. 3:20 minutes).

You can use sliding windows in combination with MAX or AUTO. However, MAX or AUTO may not be placed between TIMESERIES and SLIDE BY.

This query will automatically decide a SLIDE BY window interval.

SELECT average(duration) FROM Transaction TIMESERIES 5 minutes SLIDE BY AUTOThis query will set the SLIDE BY window to the maximum interval granularity.

SELECT average(duration) FROM Transaction TIMESERIES 5 minutes SLIDE BY MAX重要

The SLIDE BY value as determined by AUTO or MAX can produce a step interval greater than the window size, which can cause gaps and unexpected results.

SELECT ...TIMESERIES integer units...Use the TIMESERIES clause to return data as a time series broken out by a specified period of time. Since TIMESERIES is used to trigger certain charts, there is no default value.

To indicate the time range, use integer units. For example:

TIMESERIES 1 minuteTIMESERIES 30 minutesTIMESERIES 1 hourTIMESERIES 30 seconds

TIMESERIES can be combined with arguments such as MAX, AUTO, and SLIDE BY to further tailor query results, as shown in the examples below.

重要

For functions such as average() or percentile(), a large aggregation window can have a significant smoothing effect on outliers. This is true whether or not the query makes use of sliding windows.

The value provided indicates the units used to break out the graph. For example, to present a one-day graph showing 30 minute increments:

SELECT ... SINCE 1 day AGO TIMESERIES 30 minutesTIMESERIES can also be set to AUTO, which will divide your graph into a reasonable number of divisions. For example, a daily chart will be divided into 30 minute intervals and a weekly chart will be divided into 6 hour intervals.

This query returns data as a line chart showing the 50th and 90th percentile of client-side transaction time for one week with a data point every 6 hours.

SELECT average(duration), percentile(duration, 50, 90)FROM PageView SINCE 1 week AGO TIMESERIES AUTOYou can set TIMESERIES to MAX, which will automatically adjust your time window to the maximum number of intervals allowed for a given time period. This allows you to update your time windows without having to manually update your TIMESERIES buckets and ensures your time window is being split into the peak number of intervals allowed. The maximum number of TIMESERIES buckets that will be returned is 366.

For example, the following query creates 4-minute intervals, which is the ceiling for a daily chart.

SELECT average(duration) FROM Transaction SINCE 1 day ago TIMESERIES MAXSELECT ...UNTIL integer units AGO...Use the UNTIL clause to define the end of the time range to query. The value is exclusive, meaning the time range will go to the specified instant in time, but not include it.

The default value is NOW. Only use UNTIL to specify an end point other than the default.

See also:

Use the WHERE clause to filter results. NRQL returns the results that fulfill the condition(s) you specify in the clause.

SELECT function(attribute) ...WHERE attribute [operator 'value' | IN ('value' [, 'value']) | IS [NOT] NULL ][AND|OR ...]...- If you specify more than one condition, separate the conditions by the operators

ANDorOR.

Operators that the | Description |

|---|---|

| NRQL accepts standard comparison operators. Example: For attributes with boolean values, use |

| Used to define an intersection of two conditions. |

| Used to define a union of two conditions. |

| Determines if an attribute has a null value. |

| Determines if an attribute does not have a null value. |

| Determines if an attribute has a boolean value of |

| Determines if an attribute has a boolean value of |

| Determines if the string value of an attribute is in a specified set. Using this method yields better performance than stringing together multiple Example: |

| Determines if the string value of an attribute is not in a specified set. Using this method yields better performance than stringing together multiple Values must be in parentheses, separated by commas. For example: |

| Determines if an attribute contains a specified sub-string. The string argument for the Keep the following in mind:

|

| Determines if an attribute does not contain a specified sub-string. |

| Determines if an attribute contains a specified Regex sub-string. Uses RE2 syntax. Examples:

|

| Determines if an attribute does not contain a specified Regex sub-string. Uses RE2 syntax. |

This query returns the browser response time for pages with checkout in the URL for Safari users in the United States and Canada over the past 24 hours.

SELECT histogram(duration, 50, 20) FROM PageViewWHERE countryCode IN ('CA', 'US') AND userAgentName = 'Safari' AND pageUrl LIKE '%checkout%'SINCE 1 day agoFor information on querying metric data, see Query metrics.

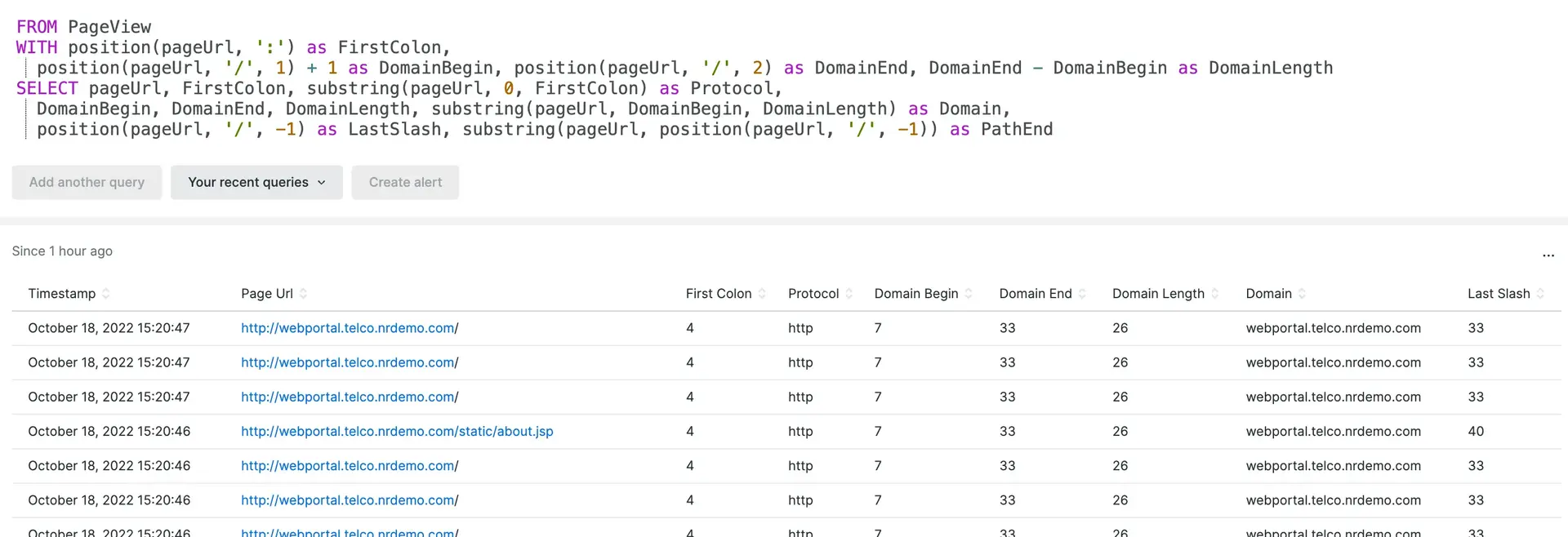

FROM ...WITH function(attribute) AS varSELECT var...Use the WITH ... AS clause to define NRQL variables to store values as variables that can be referenced anywhere in the query. Some rules and tips:

- The

WITH ... ASclause can go before, in between, or directly after theFROMorSELECTclause - Only row-wise functions (for example,

capture()) can be set as a variable. Aggregator functions, such asaverage(), are not supported. - Only one

WITHcan be used, but you can use multiple NRQL variables as long as they're separated by a comma. - If a defined NRQL variable uses the same name as an existing attribute, the variable will take precedence.

- Variable names cannot include the

%symbol.

重要

Events to metrics rules don't support the WITH ... AS clause in NRQL queries.

Here are some example queries:

FROM TransactionWITH duration * 1000 AS millisecSELECT millisecFROM LogWITH aparse(message, '%itemId":"*","unitPrice":*}%') AS (itemId, unitPrice)SELECT itemId, unitPriceLearn more about anchor parse (aparse()).

In this example a NRQL variable, unitPrice, is used to create another variable, unitPriceNum, converting the extracted string into a number.

The variables are then used in the SELECT, WHERE and FACET clauses.

FROM LogWITH aparse(message, '%itemId":"*","unitPrice":*}%') AS (itemId, unitPrice), numeric(unitPrice) AS unitPriceNumSELECT sum(unitPriceNum)FACET itemIdWHERE unitPriceNum < 100SELECT ... WITH TIMEZONE (selected zone)...Use the WITH TIMEZONE clause to select a time zone for a date or time in the query that hasn't already had a time zone specified for it.

If you include the WITH TIMEZONE clause without specifying a time zone in a date time, the since and until clauses keep in the provided time zone.

If you don't include the WITH TIMEZONE clause, but you include a time zone in a date time string, your date time string time zone keeps.

重要

The default time zone is always UTC if one is not specified. The raw timestamp values (as seen in the JSON view) in the results are always UTC. The UI displays the results in the time zone you've specified in your account settings.

A time zone in a time stamp string always works. It supersedes the WITH TIMEZONE zone.

For example, the query clause SINCE Monday UNTIL Tuesday WITH TIMEZONE 'America/New_York' returns data recorded from Monday at midnight, America/New York time, until midnight Tuesday, America/New York time.

Here are some examples of query timespan clauses:

No time zone in date time string using the

WITH TIMEZONEclause:SINCE today UNTIL '2022-05-19T12:00' WITH TIMEZONE 'America/Los_Angeles'This resolves as

"beginTime": "2022-05-19T07:00:00Z"and"endTime": "2022-05-19T19:00:00Z".Time zone in date time string, not using the

WITH TIMEZONEclause:SINCE today UNTIL '2022-05-19T12:00-0500'This resolves as

"beginTime": "2022-05-19T00:00:00Z"and"endTime": "2022-05-19T17:00:00Z".Time zone in date time string, using the

WITH TIMEZONEclause America/Los Angeles, which is -0700 during daylight saving time:SINCE today UNTIL '2022-05-19T12:00-0500' WITH TIMEZONE 'America/Los_Angeles'This resolves as

"beginTime": "2022-05-19T07:00:00Z"and"endTime": "2022-05-19T19:00:00Z".

See the available Zone IDs list.

See Set time range on dashboards and charts for detailed information and examples.

Query metric data

Metric data is more complex than other types of data. There are specific tips for querying it well. We have two types of metric data, each with their own query guidelines:

- Query dimensional metrics, which are reported by our Metric API and by some of our solutions that use that API (for example, our Dropwizard integration or Micrometer integration).

- Query metric timeslice data, which is our original metric data type reported by our APM, mobile monitoring, and .

For more details about how we report metric data, see Metric data types.

Functions

In this section we explain NRQL functions, both aggregator functions and non-aggregator functions.

Aggregator functions

You can use aggregator functions to filter and aggregate data. Some tips for using these:

- If you're using an aggregator function multiple times in the same query (for example,

SELECT median(one_metric), median(another_metric)), it can cause problems in displaying results. To solve this, use theASfunction. For example:SELECT median(one_metric) AS 'med-a', median(another_metric) AS 'med-b' - Data type "coercion" is not supported. Read about available type conversion functions.

- For how to display results over time, see Group results over time.

Examples:

SELECT histogram(duration, 10, 20) FROM PageView SINCE 1 week agoUse the aggregationendtime() function to return the time of the relevant aggregation. More specifically, for a given aggregate, the aggregationendtime() function provides the timestamp of the end of the time period of that aggregation. For example, in a time series query, for a data point that encompasses an hour’s worth of data, the function would return the timestamp of the end of that hour period.

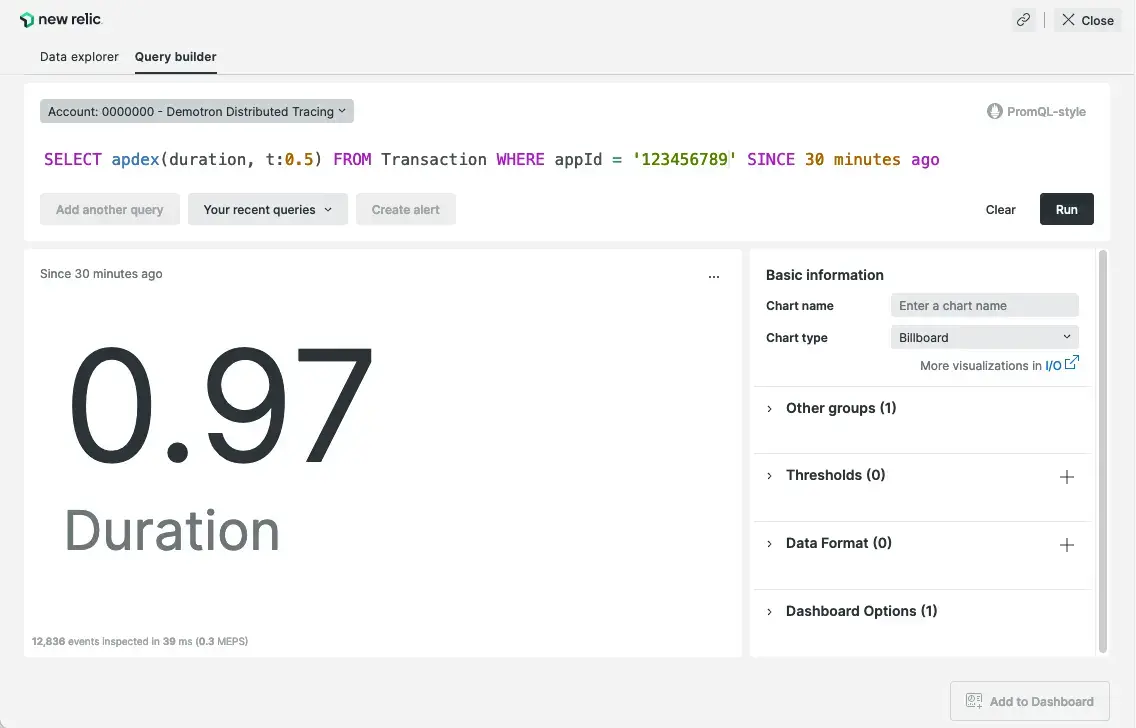

Use the apdex function to return an Apdex score for a single transaction or for all your transactions. The default Apdex score is 0.5 seconds. The attribute can be any attribute based on response time, such as duration or backendDuration. The t: argument defines an Apdex T threshold in the same unit of time as the chosen attribute. For instance, if the attribute is measured in seconds, t will be a threshold in seconds.

The Apdex score returned by the apdex() function is based only on execution time. It does not account for APM errors. If a transaction includes an error but completes in Apdex T or less, that transaction will be rated satisfying by the apdex() function.

If you have defined custom attributes, you can filter based on those attributes. For example, you could monitor the Apdex for a particularly important customer:

SELECT apdex(duration, t: 0.4) FROM TransactionWHERE customerName = 'ReallyImportantCustomer' SINCE 1 day agoUse the name attribute to return a score for a specific transaction, or return an overall Apdex by omitting name. This query returns an Apdex score for the Controller/notes/index transaction over the last hour:

The apdex function returns an Apdex score that measures user satisfaction with your site. Arguments are a response time attribute and an Apdex T threshold in seconds.

SELECT apdex(duration, t: 0.5) from TransactionWHERE name = 'Controller/notes/index' SINCE 1 hour agoThis example query returns an overall Apdex for the application over the last three weeks:

SELECT apdex(duration, t: 0.08) FROM Transaction SINCE 3 week agoUse the average() function to return the mean average value for an attribute. It takes a single attribute name as an argument. If a value of the attribute is not numeric, it will be ignored when aggregating. If data matching the query's conditions is not found, or there are no numeric values returned by the query, it will return a value of null.

The bucketPercentile() function is the NRQL equivalent of the histogram_quantile function in Prometheus. It is intended to be used with dimensional metric data. Instead of the quantile, New Relic returns the percentile, which is the quantile * 100.

Use the bucketPercentile() function to calculate the quantile from the histogram data in a Prometheus format.

It takes the bucket name as an argument and reports percentiles along the bucket's boundaries:

SELECT bucketPercentile(duration_bucket) FROM Metric SINCE 1 day agoOptionally, you can add percentile specifications as an argument:

SELECT bucketPercentile(duration_bucket, 50, 75, 90) FROM Metric SINCE 1 day agoBecause multiple metrics are used to make up Prometheus histogram data, you must query for specific Prometheus metrics in terms of the associated <basename>.

For example, to compute percentiles from a Prometheus histogram, with the <basename> prometheus_http_request_duration_seconds using NRQL, use bucketPercentile(prometheus_http_request_duration_seconds_bucket, 50). Note how _bucket is added to the end of the <basename> as a suffix.

See the Prometheus.io documentation for more information.

Use the cardinality() function to obtain the number of combinations of all the dimensions (attributes) on a metric.

It takes three arguments, all optional:

- Metric name: if present,

cardinality()only computes the metric specified. - Include: if present, the include list restricts the cardinality computation to those attributes.

- Exclude: if present, the exclude list causes those attributes to be ignored in the cardinality computation.

cdfPercentage() is an implementation of the cumulative distribution function, returning percentages of attribute values whose value is less than or equal to one or more thresholds.

cdfPercentage() aggregates on its attribute argument, which can be either a numeric attribute or a distribution metric attribute. Mixed types in one query are accepted. Other types (such as string) are ignored. Up to 10 thresholds can be specified.

This query returns the percentage of events where firstPaint is less than or equal to 0.5 seconds, and the percentage of events where firstPaint is less than or equal to 1 second.

FROM PageView SELECT cdfPercentage(firstPaint, 0.5, 1.0)Use the count() function to return a count of available records. It takes a single argument; either *, an attribute, or a constant value. Currently, it follows typical SQL behavior and counts all records that have values for its argument.

Since count(*) does not name a specific attribute, the results will be formatted in the default "humanize" format.

derivative() finds the rate of change for a given dataset. The rate of change is calculated using a linear least-squares regression to approximate the derivative. Since this calculation requires comparing more than one datapoint, if only one datapoint is included in the evaluation range, the calculation is indeterminate and won't work, resulting in a null value.

The time interval is the period for which the rate of change is calculated. For example, derivative(attributeName, 1 minute) will return the rate of change per minute.

Use the earliest() function to return the earliest value for an attribute over the specified time range.

It takes a single argument.

If used in conjunction with a FACET it will return the earliest value for an attribute for each of the resulting facets.

ヒント

If multiple events or metrics share the same earliest timestamp, the returned result is random and may vary across different query runs. To achieve more consistent results, facet by an attribute with different values for these events or metrics.

This query returns the earliest country code per each user agent from the PageView event.

SELECT earliest(countryCode) FROM PageView FACET userAgentNameUse the filter() function to limit the results for one of the aggregator functions in your SELECT statement. You can use filter() in conjunction with FACET or TIMESERIES. Filter is only useful when selecting multiple different aggregations such as:

SELECT filter(sum(x), WHERE attribute = 'a') AS 'A', filter(sum(x), WHERE attribute = 'b') AS 'B' ...Otherwise, it's better to just use the standard WHERE clause.

Use the funnel() function to generate a funnel chart. It takes an attribute as its first argument. You then specify steps as WHERE clauses (with optional AS clauses for labels) separated by commas.

For details and examples, see the funnels documentation.

Use the histogram() function to generate histograms, which are useful for

visualizing the distribution of a dataset. It divides the dataset into a

specified number of buckets and counts the number of data points that fall

into each bucket.

Arguments:

attributeThe first argument is required and specifies the attribute for which to count values that fall within each histogram bucket range.width:Indicates the width of the sample range. The maximum value of the range is thestartargument value plus thiswidthvalue.- When using positional (non-labeled) arguments,

widthis the second argument. - Default:

10

- When using positional (non-labeled) arguments,

buckets:Total number of buckets (between1and500, inclusive).- When using positional (non-labeled) arguments,

bucketsis the third argument. - Default:

40

- When using positional (non-labeled) arguments,

start:The beginning of the histogram range.- When using positional (non-labeled) arguments,

startis the fourth argument. - Default:

0

Note

Values that fall outside the defined histogram range are included in the first or last buckets. The first bucket count will include items that are smaller than the histogram range, and the last bucket count will include items that are larger than the histogram range. To exclude these values from the histogram results, include a filter in the query's where clause. (Example:

WHERE attribute >= [start] AND attribute <= [start + width])This query results in a histogram of response times ranging up to 10 seconds over 40 buckets. This means each bucket covers a 0.25 second range of values. (10 / 40 = 0.25). Any duration values larger than 10 seconds are included in the last bucket. If duration could be less than zero, those values would be included in the first bucket.

SELECT histogram(duration) FROM PageView SINCE 1 week agoThese equivalent queries result in a histogram of response times ranging up to 5 seconds over 10 buckets.

SELECT histogram(duration, 5, 10) FROM PageView SINCE 1 week agoSELECT histogram(duration, width: 5, buckets: 10) FROM PageView SINCE 1 week agoThese equivalent queries result in a histogram of response times ranging from 1 to 4 seconds over 3 buckets.

Here is a breakdown of the buckets:

Bucket 1

Bucket 2

Bucket 3

Bucket range

1 to 2

2 to 3

3 to 4

Values counted

< 2

≥ 2 and < 3

≥ 3

SELECT histogram(duration, 3, 3, 1)FROM PageView SINCE 1 week agoSELECT histogram(duration, width: 3, buckets: 3, start: 1)FROM PageView SINCE 1 week agohistogram()accepts Prometheus histogram buckets:SELECT histogram(duration_bucket, 10, 20)FROM Metric SINCE 1 week agohistogram()accepts Distribution metric as an input:SELECT histogram(myDistributionMetric, 10, 20)FROM Metric SINCE 1 week agoUse

histogram()with aFACETclause to generate a heatmap chart:SELECT histogram(duration)FROM PageView FACET appName SINCE 1 week ago- When using positional (non-labeled) arguments,

Using keyset() will allow you to see all of the attributes for a given data type over a given time range. It takes no arguments. It returns a JSON structure containing groups of string-typed keys, numeric-typed keys, boolean-typed keys, and all keys.

This query returns the attributes found for PageView events from the last day:

SELECT keyset() FROM PageView SINCE 1 day agoUse the latest() function to return the most recent value for an attribute over a specified time range.

It takes a single argument.

If used in conjunction with a FACET it will return the most recent value for an attribute for each of the resulting facets.

ヒント

If multiple events or metrics have the same latest timestamp, the returned result is random and may vary across query runs. To get more consistent results, facet by an attribute with different values for these events or metrics.

This query returns the most recent country code per each user agent from the PageView event.

SELECT latest(countryCode) FROM PageView FACET userAgentNameUse the latestrate() function to return the rate of change of a value based on the last 2 data points. It takes the attribute in question as the first argument and the unit of time for the resulting rate as the second argument. The function returns a result in units of change in attribute/time interval.

This function can be useful to provide the most recent rate of change for an attribute in order to see leading-edge trends.

This query returns the rate of change of duration based on the last 2 data points. It will be returned in units of duration/second because of the 1 SECOND argument.

SELECT latestrate(duration, 1 SECOND) FROM PageViewUse the max() function to return the maximum recorded value of a numeric attribute over the time range specified. It takes a single attribute name as an argument. If a value of the attribute is not numeric, it will be ignored when aggregating. If data matching the query's conditions is not found, or there are no numeric values returned by the query, it will return a value of null.

Use the median() function to return an attribute's median, or 50th percentile. For more information about percentile queries, see percentile().

This query will generate a line chart for the median value.

SELECT median(duration) FROM PageView TIMESERIES AUTOMedian in a JOIN clause:

Because median is simply a shortcut for

percentile(attribute, 50), amedian()result from a joined subquery is a compound data type, which maps the 50th percentile to its calculated value.To reference the actual median value in the outer query, you can use the

getField()function. Note, the mapped key is a string representation of a double value, so formedian()it is'50.0'.

Use the min() function to return the minimum recorded value of a numeric attribute over the time range specified. It takes a single attribute name as an argument. If a value of the attribute is not numeric, it will be ignored when aggregating. If data matching the query's conditions is not found, or there are no numeric values returned by the query, it will return a value of null.

Use the percentage() function to return the percentage of a target data set that matches some condition.

This function expects exactly two arguments (arguments after the first two are ignored). The first argument requires an aggregator function against the desired attribute. If the attribute is not numeric, this function returns a value of 100%. The second argument requires a WHERE clause.

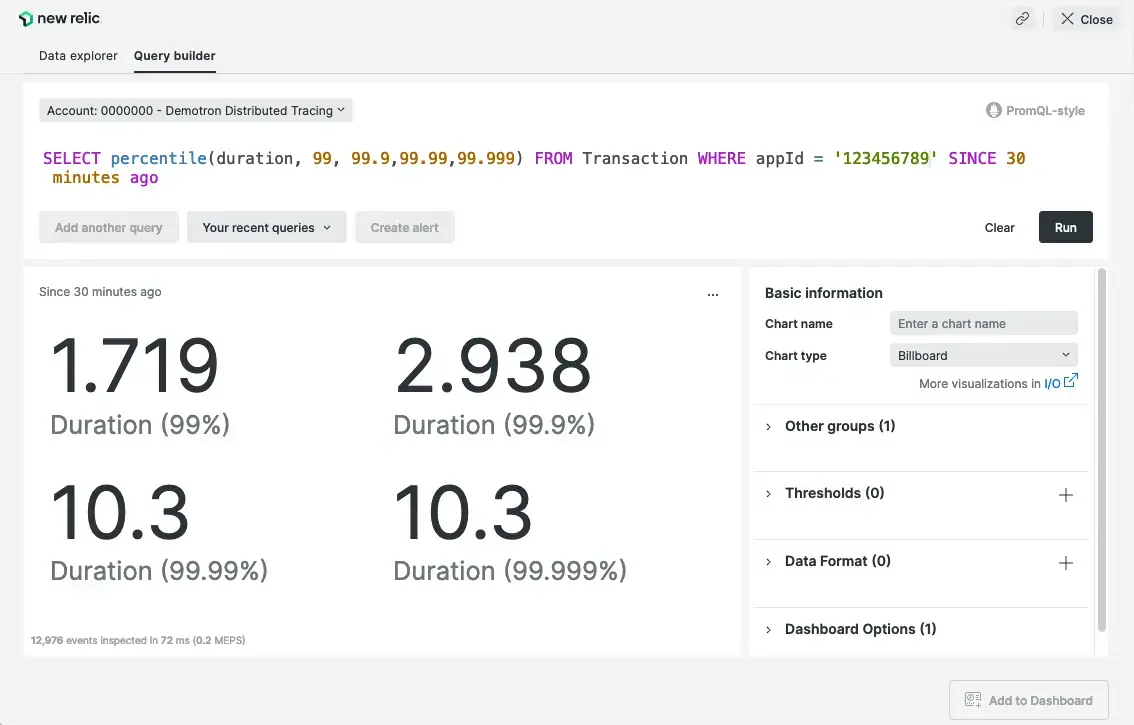

FROM Transaction SELECT percentage(count(*), WHERE error is true ) AS 'Error Percent' WHERE host LIKE '%west%' EXTRAPOLATEUse the percentile() function to return an attribute's approximate value at a given percentile. It requires an attribute and can take any number of arguments representing percentile points. The percentile() function enables percentiles to displays with up to three digits after the decimal point, providing greater precision. Percentile thresholds may be specified as decimal values, but be aware that for most data sets, percentiles closer than 0.1 from each other will not be resolved.

Percentile display examples

Use TIMESERIES to generate a line chart with percentiles mapped over time.

Omit

TIMESERIESto generate a billboard and attribute sheet showing aggregate values for the percentiles.If no percentiles are listed, the default is the 95th percentile. To return only the 50th percentile value, the median, you can also use

median().This query will generate a line chart with lines for the 5th, 50th, and 95th percentile.

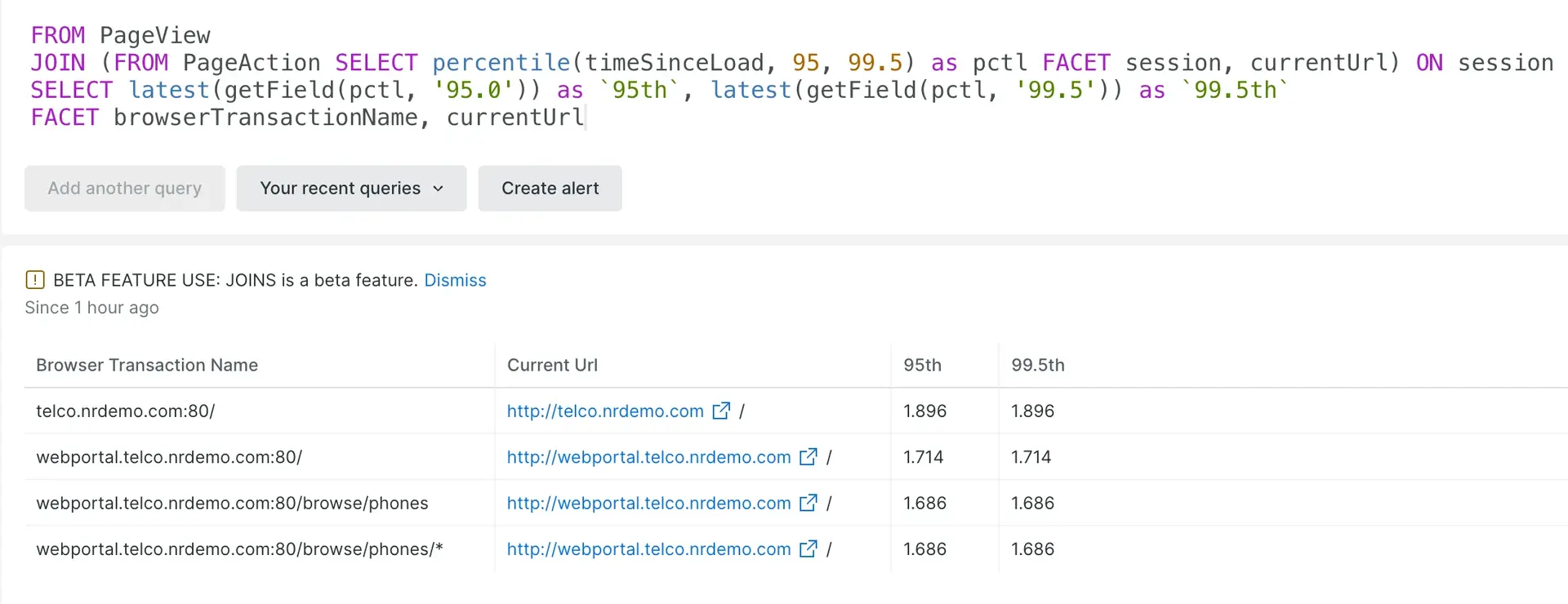

SELECT percentile(duration, 5, 50, 95) FROM PageView TIMESERIES AUTOPercentile in a

JOINclause:When using percentiles in a joined subquery, please note the results from the subquery are a compound data type, which maps each percentile to its calculated value.

To reference any of the individual percentile values in the outer query, you can use the

getField()function. Note, the mapped key is a string representation of a double value, so you need to add.0to integers. For example, the key for the 95th percentile is'95.0'.

predictLinear() is an extension of the derivative() function. It uses a similar method of least-squares linear regression to predict the future values for a dataset.

- The

time intervalis how far the query will look into the future. For example,predictLinear(attributeName, 1 hour)is a linear prediction 1 hour into the future of the query time window. - Generally,

predictLinear()is helpful for continuously growing values like disk space, or predictions on large trends. - Since

predictLinear()is a linear regression, familiarity with the dataset being queried helps to ensure accurate long-term predictions. - Any dataset which grows exponentially, logarithmically, or by other nonlinear means will likely only be successful in very short-term predictions.

- New Relic recommends against using

predictLinearinTIMESERIESqueries. This is because each bucket will be making an individual prediction based on its relative timeframe within the query, meaning that such queries will not show predictions from the end of the time series forward.

Use the rate() function to visualize the frequency or rate of a given query per time interval. For example, you might want to know the number of pageviews per minute over an hour-long period or the count of unique sessions on your site per hour over a day-long period.

Use

TIMESERIESto generate a line chart with rates mapped over time.Omit

TIMESERIESto generate a billboard showing a single rate value averaged over time.Here's a basic query that will generate a line chart showing the rate of throughput for APM transactions per 10 minutes over the past 6 hours:

SELECT rate(count(*), 10 minute) FROM TransactionSINCE 6 hours ago TIMESERIESHere's a short video (3:21 minutes) explaining how to use

rateto compare data across different time windows:

Use the stdvar() function to return the standard variance for a numeric attribute over the time range specified.

It takes a single argument. If the attribute is not numeric, it will return a value of zero.

Use the sum() function to return the sum recorded values of a numeric attribute over the time range specified.

It takes a single argument. If the attribute is not numeric, it will return a value of zero.

Use the uniqueCount() function to get the number of unique values recorded for an attribute over a specified time range. To count the unique combinations of multiple attribute values, specify those attributes with the function. You can include up to 32 attributes. This function provides an exact result for up to 256 unique values when you call it without the precision argument. For more than 256 unique values, the result is approximate. You can specify a precision value within the range of 256 to 50,000 to increase the threshold for exact results. When unique values exceed the set threshold, the function uses the HyperLogLog probabilistic data structure to provide an approximate result.

Note

How exact counting works under 256 values: When counting fewer than 256 unique string values, uniqueCount() uses a hash-based counting method to determine uniqueness. This method generates a 32-bit integer hash code from the characters in each string. While this approach efficiently distributes hash values for most strings, hash collisions can occasionally occur—meaning two different strings may generate the same hash code. This is more likely to happen when counting strings that are extremely similar to each other. In rare cases where a hash collision occurs, the function may slightly undercount the true number of unique values.

Use the uniqueCount() function by specifying the attributes and optionally set the precision argument as follows:

uniqueCount(attribute, [, attribute [, ...]] [, precision: number])- Attribute(s): Specify an attribute for which you want to count unique values, or specify multiple attributes to count unique combinations of values. You can specify up to 32 attributes.

- Precision: Specify a number that sets the threshold for exact results. You can set a value up to 50,000.

Query example without a precision argument:

SELECT uniqueCount(accountId) FROM Transaction SINCE 1 day agoQuery example with a precision argument:

SELECT uniqueCount(appName, name, clusterName, precision: 1000) FROM Transaction SINCE 1 day agoUse the uniques() function to return a list of unique values recorded for an attribute over the time range specified. When used along with the facet clause, a list of unique attribute values will be returned per each facet value.

The limit parameter is optional. When it is not provided, the default limit of 1,000 unique attribute values per facet is applied. You may specify a different limit value, up to a maximum of 10,000. The uniques() function will return the first set of unique attribute values discovered, until the limit is reached. Therefore, if you have 5,000 unique attribute values in your data set, and the limit is set to 1,000, the operator will return the first 1,000 unique values that it discovers, regardless of their frequency.

The maximum number of values that can be returned in a query result is the product of the uniques() limit times the facet limit. In the following query, the theoretical maximum number of values that can be returned is 5 million (5,000 x 1,000).

Depending on the data set being queried, and the complexity of the query, memory protection limits may prevent a very large query from being executed.

From Transaction SELECT uniques(host,5000) FACET appName LIMIT 1000If you'd like to know the unique combinations of a handful of attributes, you can structure a query in the format SELECT uniques(tuple(x, y, ... z)) ... to get all the unique tuples of values, to maintain their relationship. In the following query, tuple is used on index and cellName together to find uniques where those two values occur in combination.

FROM NodeStatus SELECT uniques(tuple(index, cellName), 5)Non-aggregator functions

Use non-aggregator functions to return values for each data point within NRQL queries.

Use the accountId() function to return the account ID associated with queried data. This function takes no arguments. Here are some example queries:

This query returns the account ID associated with each Transaction event returned:

SELECT accountId() FROM Transaction SINCE 1 day agoThis query returns the number of Transaction events in the last day that are associated with each account ID:

SELECT count(*) FROM Transaction FACET accountId() SINCE 1 day agoThis query returns the number of Transaction events in the last day where the account ID is specifically one of 1, 2, or 3:

SELECT count(*) FROM Transaction WHERE accountId() IN (1,2,3) SINCE 1 day agoUse the anchor parse function, aparse() to extract specific values from a string. This is an alternative to capture().

aparse() takes two arguments:

A string attribute

A pattern string with anchor strings and extract characters. For example, you could use

www.*.comto extract the domain from a URL.When using

aparse(), the pattern string should contain anchors, likewww.and.comabove, to identify the location of the intended extracted string, noted by*.aparse()uses the following characters in pattern strings:%: Non-capturing wildcard, as you'd see in theLIKEclause*: Capturing wildcard, similar to using regex captureIn practice, the anchor strings often occur in the middle of a string attribute, and not at the beginning or end.

In this case, use the

%wildcard to ignore unwanted values: for example,%www.*.com%.Like

capture(), all results fromaparse()are strings. To use these results in math functions they must cast with thenumeric()function.Note:

aparse()is case-insensitive.FROM PageViewSELECT aparse(browserTransactionName, 'website.com/*')To extract a value from the middle of a string, use the non-capturing wildcard,

%, at the beginning and end of the pattern string. For example:FROM LogSELECT count(*)FACET aparse(string, '%"itemId":"*"%')When extracting multiple values as variables, note that the order matters. For example:

FROM LogWITH aparse(string, 'POST: * body: {"itemId":"*","unitPrice":*}\n') AS (url, itemId, unitPrice)SELECT url, itemId, unitPriceFor more on variables, see NRQL variables.

Use the blob() function on a blob type attribute to return a base-64 encoded string of that attribute.

This function has the following restrictions:

Queries containing calls to

blob()have a maxLIMITvalue of 20blob()cannot be called in theWHEREclause of a queryblob()cannot be used in faceted queries or time series queriesFor more information about how this is used in Logging, see Find data in long logs (blobs).

To decode a base-64 encoded blob, see the

decode()function.SELECT message, blob(`newrelic.ext.message`)FROM Log WHERE newrelic.ext.message IS NOT NULL

Use the buckets() function to aggregate data split up by a FACET clause into buckets based on ranges. You can bucket by any attribute that is stored as a numerical value in the New Relic database.

It takes three arguments:

Attribute name

Maximum value of the sample range (any outliers will appear in the final bucket)

Total number of buckets

For more information and examples, see Split your data into buckets.

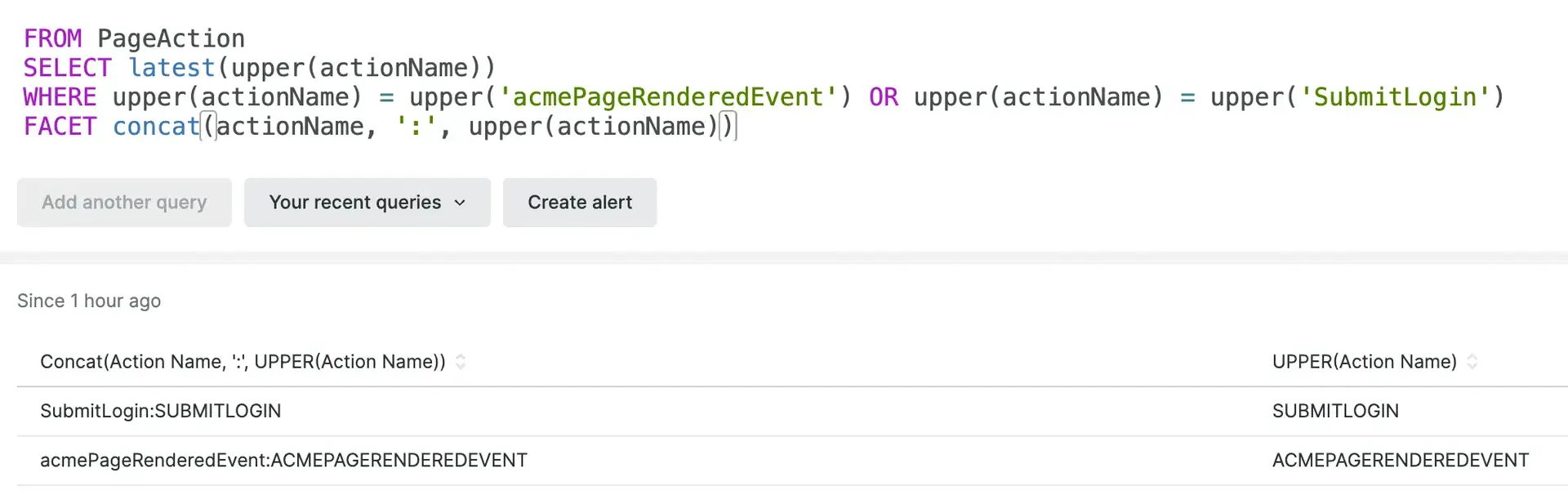

Use the concat() function to return the string resulting from concatenating its arguments.

Up to 20 arguments of numeric, boolean, tuple, or array types may be provided. Null arguments and arguments of unsupported types are ignored. If no arguments are provided, the result is the empty string.

The optional precision argument may be provided in order to limit the number of decimal places included when concatenating floating-point numeric values.

The resulting string may have a maximum length of 4096 characters.

This query returns backend and network durations from the PageView event, formatted with two decimal places and labels, as a single string.

FROM PageView SELECT concat('Backend Duration: ', backendDuration, ', Network Duration: ', networkDuration, precision: 2)This would return responses in a format like:

Backend Duration: 0.69, Network Duration: 0

This query returns the average connection setup duration from the PageView event, faceted by a string composed of the user's city, region, and country.

FROM PageView SELECT average(connectionSetupDuration) FACET concat(city, ', ', regionCode, ' ', countryCode) WHERE countryCode IN ('US', 'CA')Use the convert() function to perform unit conversion between the provided units on the given input value.

Common units and abbreviations for time, length, weight, volume, and data are supported using the UCUM standards to align with OpenTelemetry specifications. For convenience, the standardized abbreviations are augmented by some natural language alternatives like ft in addition to ft_us, kilobytes, and µs.

The units are case sensitive. All units are lowercase, unless their specification requires them to be uppercase. For example, the data units 'bits' is valid for bits and 'By' must have a capital B for bytes.

The largest unit of time is the Julian year, which is always 365.25 days.

FROM Transaction SELECT convert(duration, 'ms', 'min') AS durationMinFROM Product SELECT convert(sum(itemWeight), 'grams', 'lbs')This query assumes you have the unit information stored as a string attribute in the event itself, and that you'd like to standardized the values to seconds.

FROM Metric SELECT average(convert(apm.mobile.external.duration, unit, 's')) WHERE appName = 'my-application'Use capture() to extract values from an attribute using a regular expression with RE2 syntax.

It takes two arguments:

Attribute name

Regular expression with capture syntax (regex expressions in NRQL use Python-like syntax,

r'...')When capturing, use the RE2 named-capture syntax

...(?P<name> pattern )...to capture the contained pattern, given the specified name.Multiple values can be captured by specifying additional capture groups in a regular expression. For example:

...(?P<name1> pattern1)...(?P<name2> pattern2)...Note: When capturing multiple values, each capture statement can have up to 16 capture groups, and each NRQL query can have up to 5 capture statements.

Read how to use regex capture to improve your query results.

ヒント

The regular expression must match its entire input. If a capture expression is not extracting the expected results, check whether it needs

.*at the beginning or end, which is the pattern for a partial match regex. However, the partial match regex may cause a slower query execution.Here's a short video (3:05 minutes) showing how to use

capture()to improve dashboard readability:For more information, see the examples below:

The following will select the domain name of the website, removing

https://and any paths following the.comSELECT capture(pageUrl, r'https://(?P<baseUrl>.*.com)/.+')FROM PageView SINCE 1 day agoThe following will capture only the first word of the error message.

SELECT capture(errorMessage, r'(?P<firstWord>\S+)\s.+')FROM TransactionWHERE errorMessage IS NOT NULLSINCE 1 hour agoThe following will facet by the captured HTTP method.

SELECT count(*) FROM LogWHERE message LIKE '%HTTP%'FACET capture(message, r'.* "(?P<httpMethod>[A-Z]+) .*')The following will filter the results based on Log events with

messageattribute that matches the regular expression where the captured job name isExampleJob.SELECT message FROM LogWHERE capture(message, r'.*Job Failed: (?P<jobName>[A-Za-z]+),.*') = 'ExampleJob'SINCE 10 minutes agoThe following will capture sum of CPU Time from log lines. You must explicitly cast to numeric to do mathematical operations.

SELECT sum(numeric(capture(message, r'.*CpuTime:\s(?P<cpuTime>\d+)')))FROM LogWHERE message LIKE '%CpuTime:%' SINCE 1 hour agoIn this example NRQL Variables are used to store multiple captured values from a log message.

FROM LogWITH capture(message, r'POST to carts: (?P<URL>.*) body: {"itemId":"(?P<UUID>.*)","unitPrice":(?P<unitPrice>.*)}.*')AS (URL, UUID, unitPrice)SELECT URL, UUID, unitPriceWHERE URL IS NOT NULLSee more on NRQL Variables here.

Use decode() to perform base-64 conversions on strings and blobs. The input value (the first argument) will be decoded using the base-64 standard specified by the encoding (the second argument).

The following string values are supported encoding parameters:

'base64': Uses the RFC4648 base-64 standard

'base64mime': Uses the RFC2045 base-64 standard (MIME)

'base64url': Uses the RFC4648 base-64 standard with URL and filename safe alphabet

As

blob()is not allowed inWHEREorFACETclauses,decode()with blob types is not supported in theWHEREclause or for faceted queries.To encode strings, see the

encode()function.FROM Span SELECT entity.guid, decode(entity.guid, 'base64')WHERE entity.guid IS NOT NULLFROM Span SELECT count(*)WHERE entity.guid IS NOT NULLFACET entity.guid, decode(entity.guid, 'base64')FROM Span SELECT count(*)WHERE entity.guid IS NOT NULLAND decode(entity.guid, 'base64') NOT LIKE '%APM%'FROM LogWITH blob(`newrelic.ext.message`) AS encodedBlob,decode(encodedBlob, 'base64') AS decodedBlobSELECT encodedBlob, decodedBlobWHERE newrelic.ext.message IS NOT NULLLIMIT 10

Use the dimensions() function to return all the dimensional values on a data type.

You can explicitly include or exclude specific attributes using the optional arguments:

include: if present, the include list limitsdimensions()to those attributes.exclude: if present, thedimensions()calculation ignores those attributes.FROM Metric SELECT count(node_filesystem_size)TIMESERIES FACET dimensions()When used with a

FACETclause,dimensions()produces a unique time series for all facets available on the event type, similar to how Prometheus behaves with non-aggregated queries.

Use encode() to perform base-64 conversions on strings. The input value (the first argument) will be encoded using the base-64 standard specified by the encoding (the second argument).

The following string values are supported encoding parameters:

'base64': Uses the RFC4648 base-64 standard

'base64mime': Uses the RFC2045 base-64 standard (MIME)

'base64url': Uses the RFC4648 base-64 standard with URL and filename safe alphabet

To decode strings or blobs, see the

decode()function.encode()is not supported for blobs.FROM PageView SELECT session, encode(session, 'base64')

Use the cidrAddress() function to obtain the base network address from a CIDR IP address.

cidrAddress() takes the following arguments:

attribute- A string value that contains either an IP address on its own or with a prefix length in CIDR notation.- This can be a string attribute or a string literal in quotes.

- The IP address must be an IPv4 address.

number- An integer value which represents the prefix length.- This can be an integer attribute or an integer value.

- If the attribute parameter is in CIDR notation this parameter is optional and takes precedence over the prefix length provided in the CIDR String.

cidrFormat- An optional boolean value that is used to determine if the network address output should be formatted in CIDR notation. This will default to true.The

cidrAddress()function will return a value as long as the attribute and number parameters contain a valid IP address and prefix length. If the parameter input is invalid,cidrAddress()will returnnull.The following query returns the subnets which are processing the most requests from the SyntheticRequest event type.

FROM SyntheticRequest SELECT count(*) FACET cidrAddress(serverIPAddress, 24)This would return responses in a format like:

Cidr Address of Server IPAddress

Count

10.0.0.0/24

6k

10.10.1.0/24

4k

10.0.14.0/24

1k

This query returns all IP addresses in the

serverIPAddressattribute that exist within the CIDR range of 10.0.0.0 to 10.0.0.255.FROM SyntheticRequest SELECT uniques(serverIPAddress)WHERE cidrAddress(serverIPAddress, 24) = '10.0.0.0/24'This query returns a count of all records while excluding records which contain an

serverIPAddressvalue that falls into the CIDR range of 10.0.0.0/24 or 10.10.1.0/24.FROM SyntheticRequest SELECT count(*)WHERE cidrAddress(serverIPAddress, 24) NOT IN ('10.0.0.0/24', '10.10.1.0/24')

...WHERE eventType() = 'EventNameHere'......FACET eventType()...Use the eventType() function in a FACET clause to break out results by the selected data type or in a WHERE clause to filter results to a specific data type. This is particularly useful for targeting specific data types with the filter() and percentage() functions.

重要

In this context, "event type" refers to the types of data you can access with a NRQL query.

This query returns the percentage of total TransactionError results out of the total Transaction results. You can use the eventType() function to target specific types of data with the filter() function.

SELECT 100 * filter(count(*), WHERE eventType() = 'TransactionError') / filter(count(*), WHERE eventType() = 'Transaction') FROM Transaction, TransactionError WHERE appName = 'App.Prod' TIMESERIES 2 Minutes SINCE 6 hours agoThis query displays a count of how many records each data type (Transaction and TransactionError) returns.

SELECT count(*) FROM Transaction, TransactionError FACET eventType() TIMESERIESUse the getField() function to extract an element from an array or a field from compound data types, such as dimensional metric data.

getField() takes the following arguments:

attribute- An array or a compound data type.field- The index of the array element or the compound data type's field name.

You can also use square brackets [ ] as a shorthand for getField().

重要

Array indexing starts with 0.

Examples

Consider the array [100, 110, 90, 100, 105] stored in the durations attribute.

The below query will return 90:

SELECT getField(durations, 2) FROM FooThe following query using the getField() shorthand notation will also return 90:

SELECT durations[2] FROM FooThe supported compound data types and their fields are:

Metric type | Supported fields |

|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

Examples

SELECT max(getField(mySummary, count)) FROM MetricSELECT sum(mySummary) FROM Metric where getField(mySummary, count) > 10Query using the shorthand notation for getField():

SELECT max(mySummary[count]) FROM MetricgetCdfCount() is an implementation of the cumulative distribution function, returning the number of values in attribute at or below threshold.

Only one threshold is allowed. Attribute may be either a numeric attribute or a distribution metric attribute. Mixed types in one query are accepted.

For a numeric type, it returns 1 if the attribute is less than or equal to threshold, otherwise it returns 0. For a distribution, it returns the count in the dataset represented by the distribution. For all other types, it returns 0.

This query returns the number of events where firstPaint is less than or equal to 1 second.

FROM PageView SELECT sum(getCdfCount(firstPaint, 1.0))Use if() to perform if-then-else control flow operations throughout a query.

if() takes 3 arguments:

condition- an expression that can evaluate totrueorfalsetrueValue- this value is returned if boolean expression istruefalseValue- this optional value is returned if boolean expression isfalse, or if not providedNULL, is returnedFROM LogSELECT count(*)FACET if(level_name = 'ERROR', 'ERROR', 'NOT_ERROR')FROM LogSELECT count(*)FACET if(level_name = 'INFO' OR level_name = 'WARNING', 'NOT_ERROR', 'ERROR')Use a nested

if()function to add additional conditional logic.FROM Transaction SELECT count(*)FACET if(appName LIKE '%java%', 'Java',if(appName LIKE '%kafka%', 'Kafka', 'Other'))

Use the JSON parse function, jparse(), to parse a string value and produce a map/list of values (or nested structures) which can be handled like any other first-class value type in NRQL.

jparse() takes two arguments:

attribute- A JSON string value.path- An optional string value that is used to directly reference a particular piece of the JSON within theattributeparameter. See the JSON Parse Path Syntax Reference section below.The

jparse()function follows the RFC 8259 format to parse JSON values. When thejparse()function is used without thepathparameter, it will return the deserialized JSON value.You can use square brackets to pull out individual values from a

jparse()result via a key/index, and map JSON keys directly to attributes using theWITHclause.Referencing a key

The following query references the key

userNameswithin thejsonStringattribute and will return['abc', 'xyz'].WITH '{"userNames": ["abc", "xyz"]}' AS jsonString SELECT jparse(jsonString)[userNames]Referencing an index

The following query references index

0within thejsonStringattribute and will return'abc'.WITH '["abc", "xyz"]' AS jsonString SELECT jparse(jsonString)[0]The following query uses

jparse()in theWITHclause to map the JSON keysuserNameandidinto NRQL variables so they can be used in the rest of the query.WITH '{"userName": "test", "unused": null, "id": 100}' AS jsonString, jparse(jsonString) AS (userName, id) SELECT userName, idTo parse specific values from the JSON string, you can use the

pathparameter.It's common for JSON data to be nested in several layers in non-trivial shapes. The path syntax allows you to directly reference a particular piece of the JSON data.

Example data:

{"valueA": "test","valueB": {"nestedValue1": [1, 2, 3],"nestedValue2": 100},"valueC": [{ "id": 1, "label": "A", "other": 7 },{ "id": 2, "label": "B", "other": 9 },{ "id": 3, "label": "C", "other": 13 }]}Path syntax examples using the data above:

Path Syntax Example

Result Description

Result

valueAReturns value at key

"test"["valueA"]Returns value at key

"test"[valueA, valueC]Returns list of key values

["test", [{"id": 1…}, {"id": 2…}], {"id": 3…}]]valueB.nestedValue2Returns value at key

100valueC[0]Returns the list value at index

0{"id": 1…}valueC[0,2]Returns the list values at index

0and2[{"id": 1…}, {"id": 3…}]valueC[0:2]Returns the range of list values from the first index to second, excluding the value at the second index. In this case, the list values at index

0and1are returned.[{"id": 1…}, {"id": 2…}]valueC[:2]Returns the range of list values from the beginning to the second index, excluding the value at the second index. In this case, the list values at index

0and1are returned.[{"id": 1…}, {"id": 2…}]valueC[:-2]Returns all list values except the last n, where n is a negative number after the colon(i.e

[:-n]). In this case, the list value at index0will be returned.[{"id": 1…}]valueC[1:]Returns the range of list values from the index specified to the end of the list. In this case, the list values at index

1and2are returned.[{"id": 2…}, {"id": 3…}]valueC[-1:]Returns the last n list values, where n is a negative number before the colon(for example,

[-n:]). In this case the list value at index2will be returned.[{"id": 3…}]valueC[*]Returns all list values

[{"id": 1…}, {"id": 2…}, {"id": 3…}]valueC[*].idReturns the specified key value from all list members. In this case, the

idkey value.[1, 2, 3]valueC[*]["label", "other"]Returns the specified keys from all list members. In this case, the

labelandotherkey values.[["A", 7],…]Examples:

The following query parses the JSON string within the

jsonStringattribute.WITH '{"user": {"name": "John", "id": 5}}' AS jsonString SELECT jparse(jsonString)This query will return the deserialized JSON string:

{ "user": { "name": "John", "id": 5 } }A common problem is having rich and structured data hiding within a log message. You can leverage

aparse()andjparse()to trim away noise and find specific values.The following query:

Calls

aparse()to extract JSON data from the thelogMessageattributeParses the

user.namefield from the extracted JSON data usingjparse()and theuser.namepath parameter.WITH '1693242121842: value=\'{"user": {"name": "John", "id": 5}}\', useless=stuff' AS logMessage, aparse(logMessage, '%: value=\'*\'%') AS jsonString SELECT jparse(jsonString, 'user.name')

The following query parses each

idfield from the list of objects within thejsonStringattribute and outputs these values as an array.WITH '{"users": [{"name": "A", "id": 5}, {"name": "B", "id": 10}]}' AS jsonString, jparse(jsonString, 'users[*].id') AS ids SELECT idsThe above query will return

[5, 10].Related functions:

mapKeys(),mapValues()

Use the length() function to return the length of a string value or the number of elements in an array value.

It takes a single argument.

This query returns the length of each URL string from the PageView event.

SELECT length(pageUrl) FROM PageViewConsider the array ["US", "CA", "UK"] stored in the countries attribute.

SELECT length(countries) FROM Foolength(countries) in the above query will return 3.

Use the contains() function to check if an element exists within an array.

contains() takes the following arguments:

attribute- An arrayelement- The element to check the array for

Consider the array ["9999-1234-9999", "3333-7890-3333", "5555-3456-555"] stored in the guids attribute.

SELECT contains(guids, '5555-3456-555') FROM Foocontains(guids, '5555-3456-555') in the above query will return TRUE.

Consider the array ["9999-1234-9999", "3333-7890-3333", "5555-3456-555"] stored in the guids attribute within the Transaction event type.

SELECT count(*) FROM Transaction WHERE contains(guids, '9999-1234-9999')The above query will return a count of transactions which include the "9999-1234-9999" guid.

If you've uploaded a lookup table, you can use this function with a table name to access that table's data in a query. Here's an example query:

FROM LogSELECT count(*)WHERE hostname IN (FROM lookup(myHosts) SELECT uniques(myHost))For more information, see How to query lookup table data.

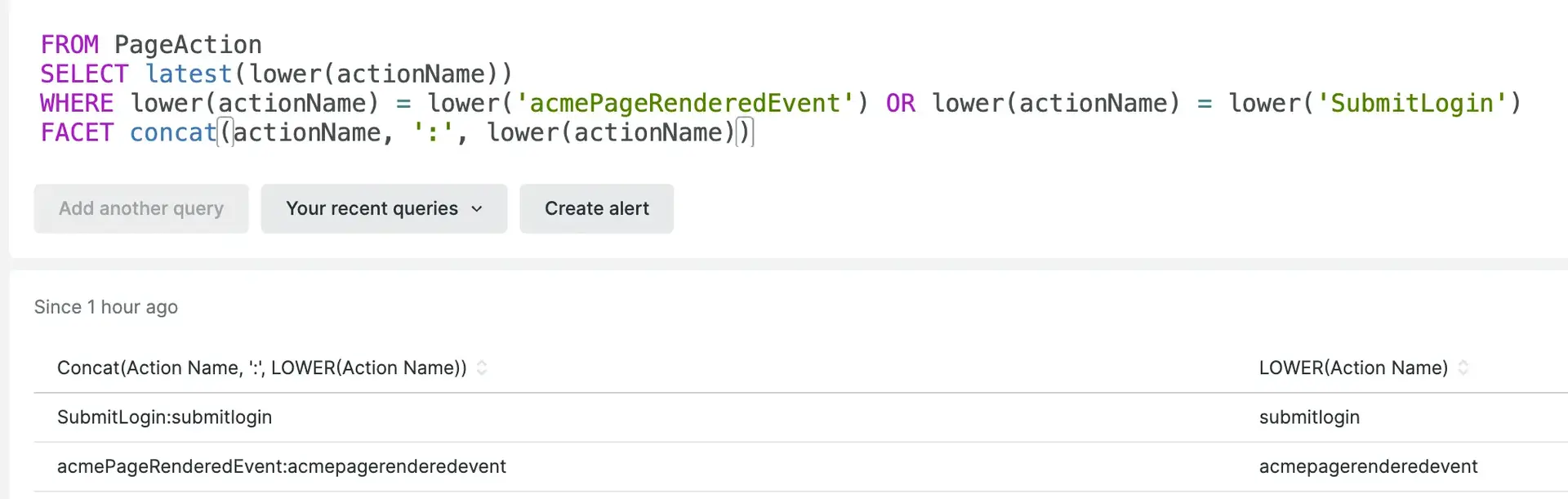

Use the lower() function to change all alphabetic characters of a string value to lower case.

Arguments:

str- The string value to be lower-cased- This can be anything that evaluates to a string, including a literal string in quotes, a queried string attribute, a function that returns a string, or even a subquery that returns a single string value.

- If this argument evaluates to null, the

lower()function will return null.

Related function:

upper()

Use the mapKeys() function to return a list of keys when provided a map as input within the attribute parameter.

WITH '{"userResult1": 100, "userResult2": 200, "userResult3": 4}' AS jsonString SELECT mapKeys(jparse(jsonString)) AS keysThe above query:

- Deserializes the JSON string within the

jsonStringattribute into a map using thejparse()function - Calls the

mapKeys()function to extract a list of all the keys within this map - Binds this list of keys to the

keysattribute

After running the above query, keys will contain the list ['userResult1', 'userResult2', 'userResult3'].

WITH '{"value1": "test", "value2": {"nestedValue1": [1, 2, 3], "nestedValue2": 100}}' AS jsonString SELECT mapKeys(jparse(jsonString)) AS keysThe above query will extract only the outermost keys from the JSON string within the jsonString attribute. After running the query, keys will contain the list ['value1', 'value2'].

Use the mapValues() function to return a list of values when provided a map as input within the attribute parameter.

WITH '{"userResult1": 100, "userResult2": 200, "userResult3": 4}' AS jsonString SELECT mapValues(jparse(jsonString)) AS valuesThe above query:

- Deserializes the JSON string within the

jsonStringattribute into a map using thejparse()function - Calls the

mapValues()function to extract a list of all the values within this map - Binds this list of values to the

valuesattribute

After running the above query, values will contain the list [100, 200, 4].

WITH '{"value1": "test", "value2": {"nestedValue1": [1, 2, 3], "nestedValue2": 100}}' AS jsonString SELECT mapValues(jparse(jsonString)) AS valuesThe above query extracts the outermost values from the JSON string within the jsonString attribute. After running the query, values will contain a list of the "test" string and nested object.

This can be seen in the JSON view:

"contents": [ { "function": "alias", "alias": "values", "contents": { "constant": [ "test", { "nestedValue1": [ 1, 2, 3 ], "nestedValue2": 100 } ] } }],Use the minuteOf() function to extract only the minute portion (that is, minutes 0 to 59) of an attribute holding a valid timestamp value. This also works for functions like hourOf(), weekOf(), and so on. For a full list of time-based functions, see the table in our group results across time doc