Cassandra monitoring integration

Our Cassandra integration sends performance metrics and inventory data from your Cassandra database to the New Relic platform. You can view pre-built dashboards of your Cassandra metric data, create alert policies, and create your own custom queries and charts.

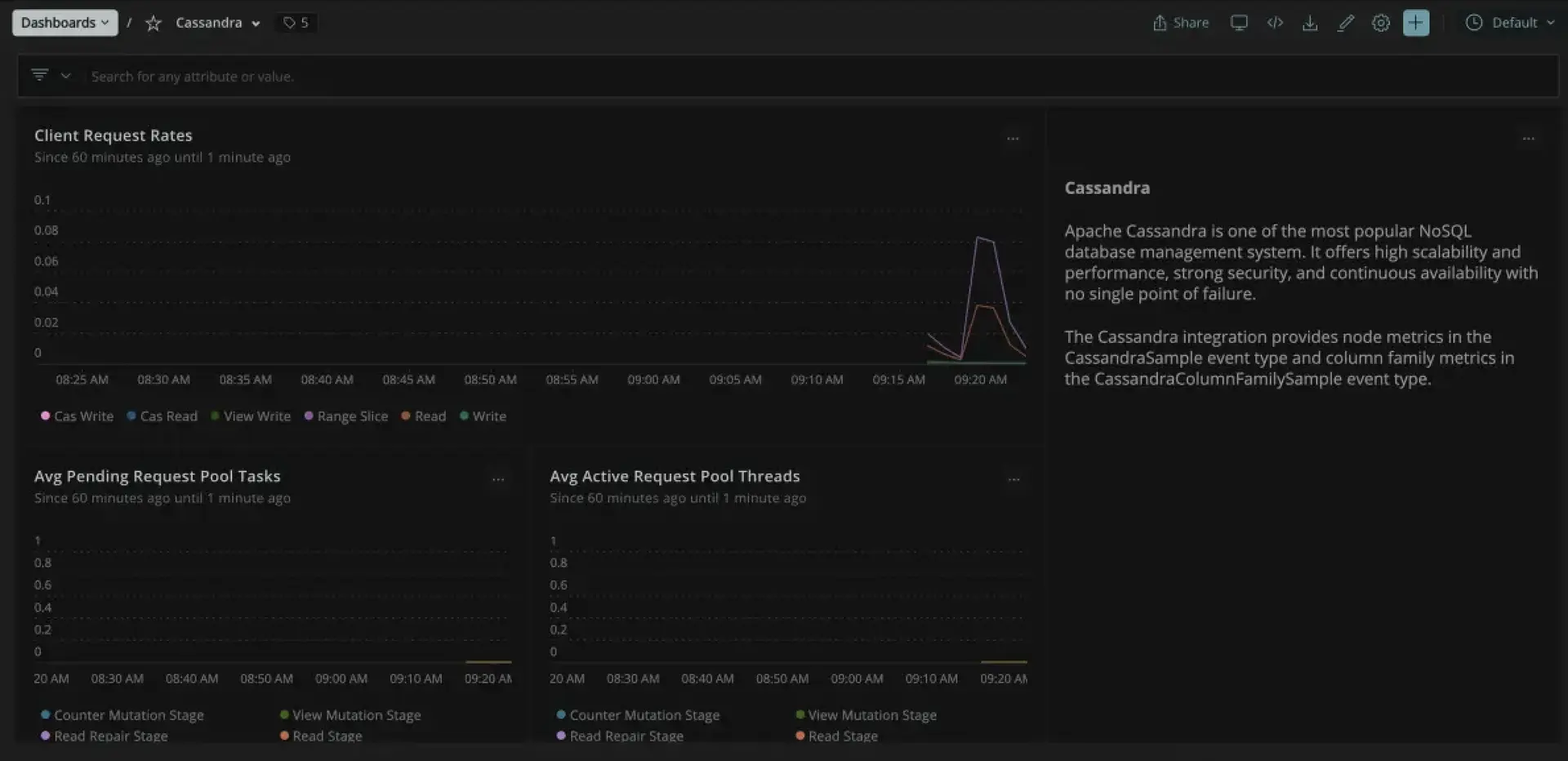

Dashboard installed through the New Relic Cassandra Monitor quickstart

Read on to install the integration and to see what data we collect.

Tip

Instrument your Cassandra database quickly and send your telemetry data with guided install. Our guided install uses our infrastructure agent and our CLI to set up the Cassandra integration. It also discovers other applications and log sources running in your environment and recommends which ones you should instrument. The guided install works with most setups, but if it doesn't suit your needs, there are other install options below.

Ready to get started? Click the relevant button, depending on which data center region you use. When you're done with the install, return to this documentation to review the configuration options.

For a more permanent and scalable solution, we recommend the standard manual install of the agent: keep reading for how to do that.

Important

This integration doesn't automatically update. For best results, regularly update the integration package and the infrastructure agent.

Configuration options

For the standard on-host installation, this integration comes with a YAML config file, cassandra-config.yml, where you can place required login credentials and configure how data is collected. Which options you change depends on your setup and preferences. The integration also ships a sample file, cassandra-config.yml.sample, that you can copy and edit.

Specific settings related to Cassandra are defined using the env section of the configuration file. These settings control the connection to your Cassandra instance as well as other security settings and features. The list of valid settings is described in the next section of this document.

Cassandra configuration options

The Cassandra integration collects both metrics (M) and inventory (I) information. In the table, the Applies to column shows which type of collection each setting affects:

| Setting | Description | Default | Applies to |
|---|---|---|---|
| | Hostname or IP where Cassandra is running. | | M/I |
| | Port on which Cassandra is listening. | | M |
| | Username for accessing JMX. | N/A | M |
| | Password for the given user. | N/A | M |
| | Path to the Cassandra configuration file. | | I |
| | Limit on number of Cassandra Column Families. | | M |
| | Request timeout in milliseconds. | | M |
| | The filepath of the keystore containing the JMX client's SSL certificate. | N/A | M |
| | The password for the JMX SSL key store. | N/A | M |
| | The filepath of the trust store containing the JMX client's SSL certificate. | N/A | M |
| | The password for the JMX SSL trust store. | N/A | M |
| | Enable multi-tenancy monitoring. | | M/I |
| | Set to | | |
| | Set to | | |

You can define the values for these settings in several ways:

- Adding the value directly in the config file. This is the most common way.

- Replacing the values from environment variables using the {{}} notation. This requires infrastructure agent v1.14.0+. Read more here or see the example below.

- Using secrets management. Use this to protect sensitive information, such as passwords that would be exposed in plain text on the configuration file. For more information, see Secrets management.

Labels

You can further decorate your metrics using labels. Labels allow you to add attributes (key/value pairs) to your metrics, which you can then use to query, filter, or group your metrics.

Our default sample config file includes examples of labels but, because they're not mandatory, you can remove, modify, or add new ones of your choice.

    labels:
      env: production
      role: load_balancer

Example configurations

Here are some example YAML configurations:
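As a minimal sketch, a metrics-collection entry in cassandra-config.yml could look like the following. The overall layout and the setting names (METRICS, HOSTNAME, PORT, USERNAME, PASSWORD) are assumptions based on the sample file shipped with the integration, so confirm them against cassandra-config.yml.sample before use. The host name, user name, and the CASSANDRA_JMX_PASSWORD environment variable are hypothetical placeholders.

```yaml
integrations:
  - name: nri-cassandra
    env:
      # Collect metrics only (setting name assumed from the sample file).
      METRICS: true
      # Placeholder host; 7199 is Cassandra's default JMX port.
      HOSTNAME: cassandra-node-1.example.internal
      PORT: 7199
      USERNAME: jmx_user
      # Value taken from a hypothetical environment variable via the {{}} notation.
      PASSWORD: {{CASSANDRA_JMX_PASSWORD}}
    interval: 15s
    # Optional labels, as described in the Labels section.
    labels:
      env: production
      role: cassandra
```

The environment variable has to be visible to the infrastructure agent process for the replacement to take effect. Any value can instead be written directly in the file.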

Metric data

Cassandra node metrics are attached to the CassandraSample event type. The Cassandra integration collects these node metrics:

| Name | Description |
|---|---|
| | Total amount of bytes stored in the memtables (2i and pending flush memtables included) that resides on-heap. |
| | Total amount of bytes stored in the memtables (2i and pending flush memtables included) that resides off-heap. |
| | The number of commit log messages written per second. |
| | Number of commit log messages written but yet to be fsync’ed. |
| | Current size, in bytes, used by all the commit log segments. |
| | Dropped messages per second for this type of request. |
| | Key cache capacity in bytes. |
| | One-minute key cache hit rate. |
| | Number of key cache hits per second. |
| | Number of requests to the key cache per second. |
| | Size of occupied cache in bytes. |
| | Number of SSTables on disk for this column family. |
| | Size, in bytes, of the on disk data size this node manages. |
| | Row cache capacity in bytes. |
| | One-minute row cache hit rate. |
| | Number of row cache hits per second. |
| | Number of requests to the row cache per second. |
| | Total size of occupied row cache, in bytes. |
| | Number of tasks being actively worked on by this pool. |
| | Number of tasks queued up on this pool. |
| | Number of tasks completed. |
| | Number of tasks that were blocked due to queue saturation. |
| | Number of tasks that are currently blocked due to queue saturation but on retry will become unblocked. |
| | Number of hints currently attempting to be sent. |
| | Number of hint messages per second written to this node. Includes one entry for each host to be hinted per hint. |
| | Transaction read latency in requests per second. |
| | Transaction write latency in requests per second. |
| | Number of range slice requests per second. |
| | Number of timeouts encountered per second when processing token range read requests. |
| | Number of unavailable exceptions encountered per second when processing token range read requests. |
| | Read latency in milliseconds, 50th percentile. |
| | Read latency in milliseconds, 75th percentile. |
| | Read latency in milliseconds, 95th percentile. |
| | Read latency in milliseconds, 98th percentile. |
| | Read latency in milliseconds, 99.9th percentile. |
| | Read latency in milliseconds, 99th percentile. |
| | Number of read requests per second. |
| | Number of timeouts encountered per second when processing standard read requests. |
| | Number of unavailable exceptions encountered per second when processing standard read requests. |
| | Number of view write requests per second. |
| | Write latency in milliseconds, 50th percentile. |
| | Write latency in milliseconds, 75th percentile. |
| | Write latency in milliseconds, 95th percentile. |
| | Write latency in milliseconds, 98th percentile. |
| | Write latency in milliseconds, 99.9th percentile. |
| | Write latency in milliseconds, 99th percentile. |
| | Number of write requests per second. |
| | Number of timeouts encountered per second when processing regular write requests. |
| | Number of unavailable exceptions encountered per second when processing regular write requests. |

Cassandra column family metrics and metadata

The Cassandra integration retrieves column family metrics and attaches them to the CassandraColumnFamilySample event type. The integration skips system keyspaces (system, system_auth, system_distributed, system_schema, system_traces, and OpsCenter). To limit the performance impact, it captures metrics for a maximum of 20 column families.

The following metadata indicates the keyspace and column family associated with the sample metrics:

| Name | Description |
|---|---|
| | The Cassandra column family these metrics refer to. |
| | The Cassandra keyspace that contains this column family. |
| | The keyspace and column family in a single metadata attribute in the following format: |

The metrics below refer to the specific keyspace and column family specified in the metadata above:

| Name | Description |
|---|---|
| | Total number of bytes stored in the memtables (2i and pending flush memtables included) that resides off-heap. |
| | Total number of bytes stored in the memtables (2i and pending flush memtables included) that resides on-heap. |
| | Disk space, in bytes, used by SSTables belonging to this column family. |
| | Number of SSTables on disk for this column family. |
| | Estimate of number of pending compactions for this column family. |
| | Number of SSTable data files accessed per read, 50th percentile. |
| | Number of SSTable data files accessed per read, 75th percentile. |
| | Number of SSTable data files accessed per read, 95th percentile. |
| | Number of SSTable data files accessed per read, 98th percentile. |
| | Number of SSTable data files accessed per read, 99.9th percentile. |
| | Number of SSTable data files accessed per read, 99th percentile. |
| | Local read latency in milliseconds for this column family, 50th percentile. |
| | Local read latency in milliseconds for this column family, 75th percentile. |
| | Local read latency in milliseconds for this column family, 95th percentile. |
| | Local read latency in milliseconds for this column family, 98th percentile. |
| | Local read latency in milliseconds for this column family, 99.9th percentile. |
| | Local read latency in milliseconds for this column family, 99th percentile. |
| | Number of read requests per second for this column family. |
| | Local write latency in milliseconds for this column family, 50th percentile. |
| | Local write latency in milliseconds for this column family, 75th percentile. |
| | Local write latency in milliseconds for this column family, 95th percentile. |
| | Local write latency in milliseconds for this column family, 98th percentile. |
| | Local write latency in milliseconds for this column family, 99.9th percentile. |
| | Local write latency in milliseconds for this column family, 99th percentile. |
| | Number of write requests per second for this column family. |

Inventory

The integration captures configuration options defined in the Cassandra configuration and reports them as inventory data in the New Relic UI.
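An inventory-only entry might be sketched as below. The setting names INVENTORY and CONFIG_PATH and the inventory_source value are assumptions based on the integration's sample file, and the host name and file path are placeholders, so check them against cassandra-config.yml.sample and your own installation.

```yaml
integrations:
  - name: nri-cassandra
    env:
      # Collect inventory only (setting name assumed from the sample file).
      INVENTORY: true
      # Placeholder host name.
      HOSTNAME: cassandra-node-1.example.internal
      # Path to the Cassandra configuration file; adjust to your installation.
      CONFIG_PATH: /etc/cassandra/cassandra.yaml
    # Inventory changes rarely, so a longer interval than for metrics is usually enough.
    interval: 60s
    inventory_source: config/cassandra
```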

System metadata

The Cassandra integration also collects these attributes about the service and its configuration:

| Name | Description |
|---|---|
| | The Cassandra version. |
| | The name of the cluster this Cassandra node belongs to. |

Check the source code

This integration is open source software. That means you can browse its source code and send improvements, or create your own fork and build it.